The travel industry is dynamic, and client preferences and needs can change at any time. To grow over time, companies in this sector must stay up to date on customer preferences, industry developments, and the reasons behind their own past successes.

Data is needed to address the current challenges facing the tourism industry. It helps travel companies discover new routes, alternative modes of transportation, revised itineraries, and destinations. It also makes it possible to report delays at rail or airport terminals and to monitor pricing trends. Through data scraping, companies can collect information on their clients' preferences, sentiment, and reviews.

What is Travel Fare Data Scraping?

Travel fare data scraping is the use of automated tools or programs to retrieve data from websites that compile and present travel fare information, including airfare, lodging, and rental car costs. These fare aggregator websites gather and present rates from multiple sources so that customers can compare and select the best offers.

Scraping these platforms is mostly done to simplify tasks such as comparing prices, analyzing data for business or research, and building custom tools for more personalized search results. However, web scraping must be done carefully, because it can violate the terms of service of the websites being scraped, and unauthorized scraping may have legal repercussions. Professionals should therefore put ethical and legal concerns first, and use approved APIs or request permission before scraping.

Impact of Travel Fare APIs on the Industry

Travel Fare APIs have transformed the travel business by enhancing the overall experience. One advantage is better access to accurate, real-time fare information. These APIs let organizations pull current pricing data from a variety of travel service companies and integrate it into their own platforms. This makes pricing more transparent and efficient for consumers, and it allows businesses to make informed decisions based on market fluctuations and demand trends.

The use of Travel Fare APIs has made the travel industry more competitive. These APIs allow people to compare prices and services from different travel companies easily. As a result, customers can make smarter decisions. Also, this competition encourages travel companies to improve their services, customer support, and pricing to stay competitive in the market.

Apps built on these APIs can plan personalized trips, help travelers stick to a budget, and even connect with loyalty programs. This brings in more revenue for the companies behind them and encourages them to keep coming up with new ideas for the travel industry.

How Can Web Scraping Benefit the Travel Sector?

Web scraping, a technique for extracting information from websites, significantly benefits the travel sector. One of the key advantages lies in enhanced market analysis. By gathering data from different sources, travel businesses can gain valuable insights into customer preferences, market trends, and competitor strategies.

This information becomes crucial for making informed decisions and staying competitive in the dynamic travel industry.

Better Understanding of the Market

By collecting data from different places, travel companies can learn much about what customers like, what's trending in the market, and what other companies are doing. This helps them make smarter decisions and stay competitive.

Smart Pricing

Price scraping helps travel businesses keep an eye on prices across different platforms. This way, they can adjust their prices to attract more customers. It's like a real-time guide to set the best prices and make more money.
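As a rough illustration of how scraped prices might feed a pricing decision, the sketch below compares one fare against a list of competitor fares. The function name `price_position` and the sample figures are hypothetical, and a real pricing strategy would weigh many more factors than the lowest and median price.

```python
from statistics import median

def price_position(own_price, competitor_prices):
    """Summarize how one fare compares with scraped competitor fares."""
    if not competitor_prices:
        raise ValueError("need at least one competitor price")
    lowest = min(competitor_prices)
    mid = median(competitor_prices)
    return {
        "lowest": lowest,
        "median": mid,
        # Positive gap means our fare is more expensive than the cheapest rival.
        "gap_to_lowest": round(own_price - lowest, 2),
        "above_median": own_price > mid,
    }

# Made-up sample fares for a single route.
summary = price_position(450.0, [420.0, 435.0, 480.0])
print(summary)
```

A summary like this could be recomputed whenever fresh prices are scraped, so that pricing staff see where each fare stands in the market.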

Creating Cool Stuff

Travel companies can use the scraped data to develop new and extraordinary services. For example, they can make personalized travel planners or tools that help people manage their budgets while traveling. This makes customers happy and brings in more money for the company.

Getting Rid of Boring Tasks

Scraping travel data can automate repetitive tasks for travel businesses. This means they don't have to waste time on boring stuff and can focus on more important work. It's like having a helper that does the tedious work.

Major Challenges of Scraping Travel Fare Aggregator Websites

Travel fare aggregators can be an important data source for every travel service provider. However, scraping them comes with a few challenges that must be overcome to succeed in a changing market. Many parties try to collect price and availability information from travel websites, which is part of what makes it tricky.

Some of the challenges that make scraping travel fare aggregator websites tricky are:

Anti-Scraping Procedures

These websites use techniques to prevent automated data collection, much like building obstacles or puzzles to keep robots out. They can display CAPTCHA challenges, block certain IP addresses, or set up traps to catch automated tools. This complicates information gathering for scrapers.

Dynamic Website Structure

These websites can modify their structure. They dynamically vary their appearance by using sophisticated approaches such as loading items only when requested (for example, loading extra flight options as you scroll down). This makes it difficult for typical scraping methods to extract the desired data consistently.

Rate-limiting and throttling

Consider a line at a shop where only a few patrons are allowed in at once. Similarly, some websites limit the pace at which scrapers can gather data. If a scraper sends requests too quickly or too aggressively, the website can block it temporarily or permanently.
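One common way to cope with rate limits is to slow down and retry with exponential backoff. The sketch below only computes how long to wait before each retry; the function name `backoff_delay` and its default values are assumptions for illustration, and it assumes that any `Retry-After` value from the server is given in seconds (the header can also carry a date, which is not handled here).

```python
import random

def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0, jitter=True):
    """Return the seconds to wait before retry number `attempt` (0-based)."""
    if retry_after is not None:
        # Assumption: the server sent Retry-After as a number of seconds.
        return float(retry_after)
    # Double the wait on every attempt, but never exceed the cap.
    delay = min(cap, base * (2 ** attempt))
    if jitter:
        # Randomize the wait so many clients do not retry at the same moment.
        delay = random.uniform(0, delay)
    return delay

# Without jitter the waits grow as 1, 2, 4, 8 seconds.
schedule = [backoff_delay(n, jitter=False) for n in range(4)]
print(schedule)
```

A scraper would typically sleep for the returned number of seconds after a "too many requests" response and give up after a fixed number of attempts.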

Complicated Data Organization

The content on these websites is scattered across multiple pages, like the pieces of a jigsaw puzzle. It is often poorly organized, so collecting all the necessary pieces in the right sequence is hard. Scrapers have a lot of work to do: they must navigate multiple pages and deal with numerous data types.

Data Quality and Accuracy

Scrapers can collect data, but ensuring its accuracy and reliability is tough. These websites frequently change their pricing and information. As a result, scraped data may not always be accurate or up to date.

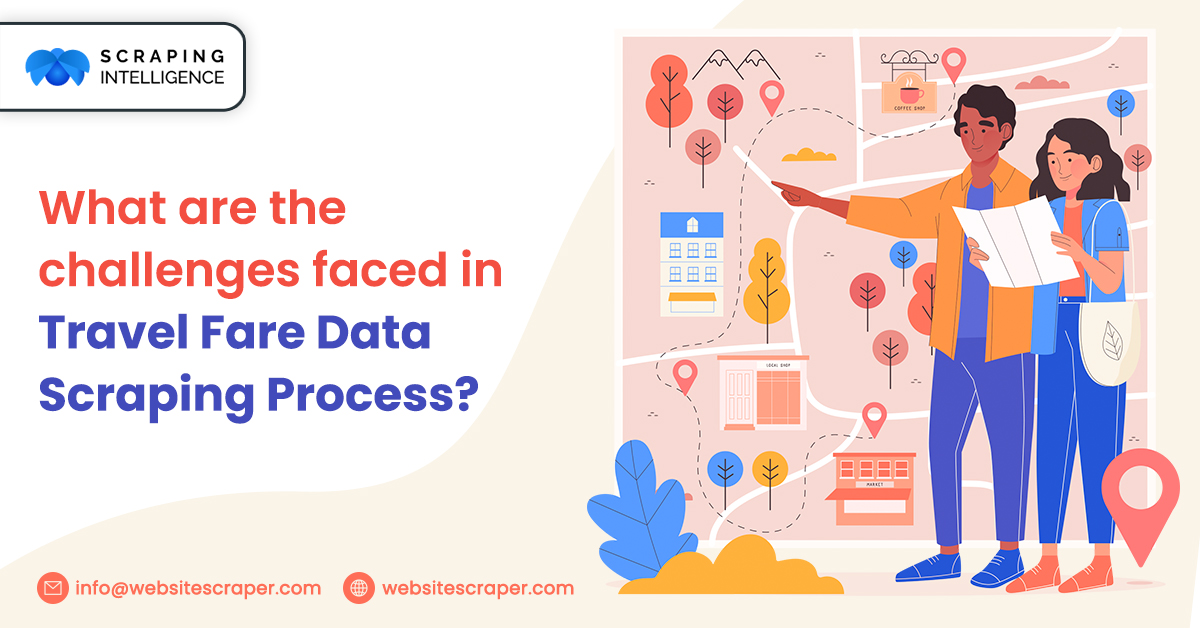

Strategies for Overcoming Challenges in Data Scraping from Travel Websites

Several things can go wrong when scraping data from travel websites. Still, these problems can be addressed with care to ensure a smooth and ethical data extraction process.

Overcoming the challenges of travel fare scraping is essential to making it effective and dependable. Adhering to each website's standards and to legal restrictions also allows scraping efforts to scale.

Using Proxies and IP Rotation

Consider dressing differently each time you visit the same store. Similarly, proxies disguise your machine and alter how it appears online. Rotating IP addresses is like routinely changing these disguises. This helps prevent the website from blocking the scraper and makes the scraper harder to identify.
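A minimal sketch of round-robin rotation is shown below. The proxy addresses are placeholders from a documentation-only IP range, and `proxy_rotation` is a hypothetical helper name; a real setup would use proxies you are authorized to use and would also handle proxies that fail.

```python
from itertools import cycle

# Placeholder addresses from a documentation-only range, not real proxies.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

def proxy_rotation(proxies):
    """Yield one proxy mapping per request, cycling through the list forever."""
    for proxy in cycle(proxies):
        # Use the same proxy for both plain and TLS traffic.
        yield {"http": proxy, "https": proxy}

rotation = proxy_rotation(PROXIES)
first = next(rotation)
second = next(rotation)
print(first["http"], second["http"])
```

Each mapping has the shape that the `requests` library accepts through its `proxies` argument, for example `requests.get(url, proxies=next(rotation))`.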

Browser Automation Tools

These programs, such as Selenium, function as magic wands for scrapers. Because they replicate human activity in a web browser, they can handle complex website features that change or appear only when a person interacts with them. This enables precise data collection, even from websites that rely on JavaScript.

Monitoring and Adaptation

Scraping code must be monitored continuously, much as a driver watches the road and responds to traffic signs. Travel websites update their pages frequently, and each change can break the scraping code, so scrapers need to detect failures quickly and adapt.
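One simple way to notice that a page layout has changed is to check whether the markers the scraper depends on are still present. The sketch below uses only the Python standard library; the class names `fare-price` and `fare-route` and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser

class ClassCollector(HTMLParser):
    """Collect every CSS class name that appears in an HTML document."""

    def __init__(self):
        super().__init__()
        self.classes = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "class" and value:
                self.classes.update(value.split())

def missing_classes(html, expected):
    """Return the expected class names that no longer appear in the page."""
    collector = ClassCollector()
    collector.feed(html)
    return sorted(set(expected) - collector.classes)

EXPECTED = {"fare-price", "fare-route"}  # hypothetical class names
# Sample page in which the price element has been renamed.
page = '<div class="fare-route">NYC-LON</div><span class="price-v2">$420</span>'
print(missing_classes(page, EXPECTED))  # ['fare-price']
```

If the returned list is not empty, the scraper can raise an alert instead of silently storing incomplete data.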

Ethical Scraping

Everyone must follow the rules, much like a considerate visitor in someone's home. Website rules are usually documented in the terms of service or a robots.txt file. Respecting them, for example by staying out of disallowed areas and pausing between requests, keeps things operating smoothly and prevents problems with the website.
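Python's standard library includes a robots.txt parser that can help with this. The sketch below parses an inline, hypothetical robots.txt rather than fetching a real one, and the user agent name `fare-research-bot` and the `example.com` URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would fetch it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

def build_rules(robots_txt):
    """Parse robots.txt text into a rule object that can be queried."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

AGENT = "fare-research-bot"  # placeholder user agent name
rules = build_rules(ROBOTS_TXT)

allowed = rules.can_fetch(AGENT, "https://example.com/flights/nyc-lon")
blocked = rules.can_fetch(AGENT, "https://example.com/checkout/cart")
# Fall back to a one-second pause when the site does not declare a delay.
delay = rules.crawl_delay(AGENT) or 1
print(allowed, blocked, delay)
```

A scraper would skip any URL for which `can_fetch` returns `False` and sleep for `delay` seconds between requests. robots.txt does not replace the terms of service, which still need to be read separately.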

Data Validation and Cleaning

This stage can be compared to sifting through a disorganized stack of documents to find the relevant ones. Scraped data has to be verified, because it can be inaccurate, disorganized, or error-prone. Validating and cleaning it means checking for accuracy and correcting any mistakes, which makes the scraped data trustworthy and dependable.
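The sketch below shows what a basic cleaning pass might look like for scraped fare rows. The field names `route` and `price` and the sample rows are hypothetical, and the price parser assumes a dot as the decimal separator and commas as thousands separators, which does not hold for every locale.

```python
import re

def parse_price(text):
    """Convert a price string such as '$1,299.50' to a float, or return None."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text or "")
    if not match:
        return None
    return float(match.group().replace(",", ""))

def clean_fares(rows):
    """Normalize raw rows, drop invalid ones, and remove duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        route = (row.get("route") or "").strip().upper()
        price = parse_price(row.get("price"))
        if not route or price is None or price <= 0:
            continue  # skip rows with a missing route or an unusable price
        key = (route, price)
        if key in seen:
            continue  # skip duplicates of a row already kept
        seen.add(key)
        cleaned.append({"route": route, "price": price})
    return cleaned

raw = [
    {"route": " nyc-lon ", "price": "$1,299.50"},
    {"route": "NYC-LON", "price": "1299.50"},  # duplicate after normalization
    {"route": "PAR-ROM", "price": "N/A"},      # unusable price
    {"route": "", "price": "$80"},             # missing route
]
print(clean_fares(raw))  # [{'route': 'NYC-LON', 'price': 1299.5}]
```

Real pipelines usually add further checks, such as currency detection and comparing each price against a plausible range for the route.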

Proxies and Geolocation

When dealing with IP-based and geographic restrictions, use a variety of proxies that originate from different locations. The key is to make sure the proxies are dependable and rotated regularly. This keeps their use discreet and allows access to content from many regions.

Conclusion

Fare scraping is a technique for extracting fare data from travel websites. Travel firms can benefit from a web scraper API provided by Scraping Intelligence. This tool can communicate with travel websites and detect changes to their webpages. It helps travel firms get the data they need smoothly.

Travel agencies can use travel fare scraping to gather crucial information that helps them meet their objectives. By using web scraping in tandem with proxies, travel firms can conceal their IP addresses and locations, which can help them get past IP limitations and geo-restrictions. With web scraping, travel agencies can offer their clients precise, up-to-date, and valuable travel information and keep their operations running smoothly.
