Introduction

The project entails developing a real-time web application that collects data from multiple newspapers and displays a summary of various subjects mentioned in the news items.

This is accomplished through three components: a machine learning classification model that predicts the category of a given news article, a web scraping method that obtains the latest headlines from the newspapers, and an interactive web application that displays the results to the user.

The entire procedure is divided into three main parts:

- Training for classification models (link)

- Web scraping of news articles (this post)

- App development and distribution (link)

In this post, we’ll write a script that scrapes the most recent news stories from several newspapers and saves the text, which will later be fed into the model to predict a category. We’ll cover it in the following steps:

- A quick overview of web pages and HTML

- Python web scraping with BeautifulSoup

1. A Quick Overview of the Web Page and HTML

The first step in extracting news stories (or any other type of material) from a website is to understand how it operates.

When we enter a URL into a web browser (such as Google Chrome, Firefox, or Internet Explorer) and view it, we are seeing a combination of three technologies:

HTML (HyperText Markup Language) is the standard language for adding content to a webpage. It enables us to add text, images, and other content to our site. In essence, HTML determines the content of a website.

CSS (Cascading Style Sheets) is the language that lets us customize the appearance of a website. In essence, CSS determines its style.

JavaScript adds behavior to a webpage, such as interactive elements. Together, these three languages let us create and manipulate every aspect of a webpage.

If we wanted to make a website available to everyone through a browser, we would also need to learn about things like setting up a web server and using a specific domain. However, because we are only interested in collecting material from a website today, this is enough.

Let’s use an example to demonstrate these topics.

We’d get something like this if we removed the CSS content from the webpage:

And we wouldn’t be able to use this pop-up if we deactivated JavaScript:

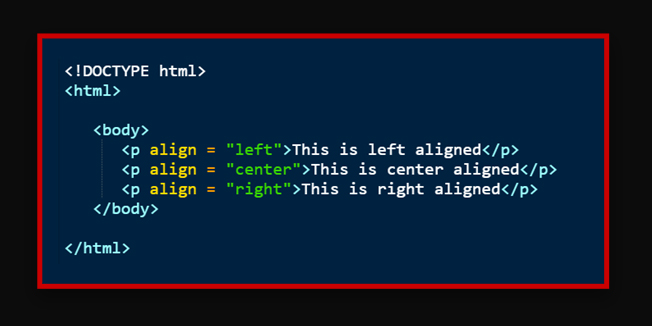

The content of a webpage is stored in elements. There are multiple kinds of elements, each with its own set of attributes. Tags are used to identify an element (for example, to determine whether some text is a heading or a paragraph). These tags are written between the < and > symbols (for example, a <p> tag denotes that a piece of text is being used as a paragraph).

For instance, the HTML code below allows us to adjust the paragraph alignment:

As a result, when we visit a website, we can locate its content and attributes in the HTML code.
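To make the relationship between tags, attributes, and content concrete, here is a minimal sketch that uses Python’s built-in html.parser module. The HTML snippet and the class name TagPrinter are made up for illustration and do not come from any real page.

```python
from html.parser import HTMLParser

# Hypothetical snippet: a paragraph element carrying an "align" attribute.
SAMPLE_HTML = '<p align="center">This paragraph is centered.</p>'


class TagPrinter(HTMLParser):
    """Records every opening tag, its attributes, and the text it encounters."""

    def __init__(self):
        super().__init__()
        self.events = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs
        self.events.append(("tag", tag, dict(attrs)))

    def handle_data(self, data):
        self.events.append(("text", data))


parser = TagPrinter()
parser.feed(SAMPLE_HTML)
parser.close()

for event in parser.events:
    print(event)
```

The first recorded event holds the tag name and its attributes, and the second holds the text inside the element. These are the same pieces of information a scraper looks for.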

Now that we’ve covered these concepts, we’re ready to do some web scraping.

2. Web Scraping using BeautifulSoup in Python

Python has several libraries that enable us to scrape data from websites. BeautifulSoup is one of the most popular ones.

BeautifulSoup enables us to analyze the HTML content of a URL and retrieve its elements by detecting their tags and attributes. As a result, we’ll use it to extract certain text from the document.

It’s a simple-to-use library with a lot of capability. We will be able to extract content from a page with about 3–5 lines of code.

To install it, run the following command in your Python environment (the leading ! is for Jupyter notebooks; omit it in a terminal):

! pip install beautifulsoup4

We’ll also need the requests module to give BeautifulSoup the HTML code of any page. If it isn’t already included in your Python distribution, install it with:

! pip install requests

We’ll get the HTML code from the site with the requests module, then navigate it with the BeautifulSoup package. We’ll learn two methods that will suffice for our needs:

find_all(element tag, attribute): It lets us locate any HTML element on a page by specifying its tag and attributes. This method returns all elements that match. Alternatively, we can use find() to get only the first one.

get_text(): Once we have located an element, this method lets us retrieve its text.
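The two methods can be tried without touching the network. The sketch below runs them on a small HTML string that was invented for this example; the class names are hypothetical. It also assumes the "html.parser" parser that ships with Python, rather than the html5lib parser used later in this post.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML, invented for this example.
SAMPLE_HTML = """
<html><body>
  <h2 class="title"><a href="/first">First headline</a></h2>
  <h2 class="title"><a href="/second">Second headline</a></h2>
  <h2 class="other">Not a news title</h2>
</body></html>
"""

# "html.parser" is built into Python, so no extra parser package is needed.
soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# find_all returns every element that matches the tag and attributes
titles = soup.find_all("h2", class_="title")

# find returns only the first match (or None when nothing matches)
first_title = soup.find("h2", class_="title")

print(len(titles))
print(first_title.get_text())
print([title.get_text() for title in titles])
```

Note that the keyword is class_ with a trailing underscore, because class is a reserved word in Python.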

So, at this stage, we must look through our webpage’s HTML code (for example, in Chrome, we open the webpage, right-click, and select View page source) and find the elements we want to scrape. Once we’re in the source code, we can simply use Ctrl+F or Cmd+F to search.

Once we’ve found the elements of relevance, we’ll use the requests module to acquire the HTML code and BeautifulSoup to extract those elements.

We’ll use the English edition of the Spanish daily El Pais as an example. We’ll first scrape the titles of the news stories from the front page, and then extract the text.

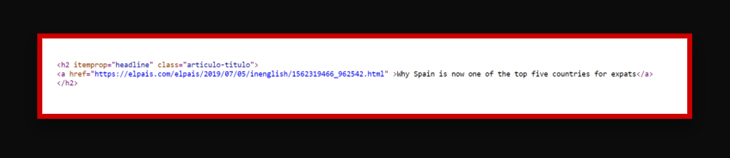

To find the news articles, we must first visit the website and study its HTML code. After a quick look, we can see that each article on the front page contains the following elements:

The headline is an <h2> (heading-2) element with the attributes itemprop="headline" and class="articulo-titulo". Its text is contained in an <a> element with an href attribute. To retrieve the text, we’ll need the following commands:

# importing the necessary packages
import requests
from bs4 import BeautifulSoup

We can get the HTML content using the requests module and save it to the coverpage variable:

# url is assumed to hold the address of the front page being scraped
r1 = requests.get(url)
coverpage = r1.content
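A request can fail or return an error page, so in practice it may help to wrap the download in a small function. The following is only a sketch: the function name fetch_html and the 10-second default timeout are assumptions made for this example, not part of the original script.

```python
import requests


def fetch_html(url, timeout=10):
    """Return the raw HTML of `url` as bytes, raising on HTTP errors."""
    # The timeout stops the script from hanging on an unresponsive server
    response = requests.get(url, timeout=timeout)
    # Turn 4xx/5xx responses into an exception instead of parsing an error page
    response.raise_for_status()
    return response.content
```

With such a helper, the download step could be written as coverpage = fetch_html(url), and any failure would surface as a requests.exceptions.RequestException.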

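For BeautifulSoup to work, we must first make a soup. The html5lib parser used here is a separate package, so install it with pip install html5lib if it is missing: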

soup1 = BeautifulSoup(coverpage, 'html5lib')

Finally, we’ll be able to find the elements we’re looking for:

coverpage_news = soup1.find_all('h2', class_='articulo-titulo')

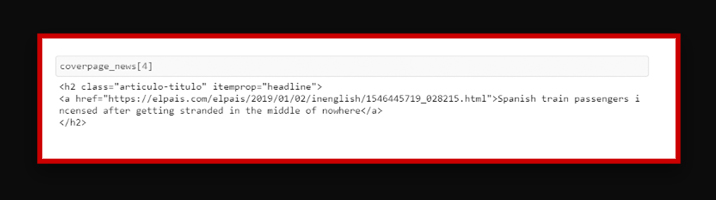

Because we’re retrieving all occurrences with find_all, this returns a list in which each element is a news article.

We will be able to extract the text if we code the following command:

coverpage_news[4].get_text()

To obtain the value of a specific attribute (in this example, the link), we first select the <a> element inside the headline, since that is where the href attribute lives:

coverpage_news[4].find('a')['href']

This returns the URL as plain text.
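Attribute access with square brackets raises a KeyError when the attribute is missing, and links on a page may be relative. The sketch below shows one defensive way to collect links. The HTML, the BASE_URL placeholder, and the class name are all invented for this example.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Placeholder address and HTML, invented for this example.
BASE_URL = "https://example.com/news/"
SAMPLE_HTML = """
<h2 class="title"><a href="/story-1.html">Story one</a></h2>
<h2 class="title">Headline without a link</h2>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

links = []
for headline in soup.find_all("h2", class_="title"):
    anchor = headline.find("a")
    # find returns None when the headline has no <a> child
    if anchor is None:
        continue
    # .get returns None instead of raising KeyError if href is absent
    href = anchor.get("href")
    if href:
        # urljoin resolves relative links against the page address
        links.append(urljoin(BASE_URL, href))

print(links)
```

The second headline is skipped because it has no link, and the relative path in the first one is resolved into a full URL.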

If you’ve followed the instructions to this point, you’re ready to web scrape any content.

The next stage is to use the href attribute to request each news article, then look through its source code and find the paragraphs so we can extract them with BeautifulSoup. It’s the same concept as before, but this time we need to find the tags and attributes that identify the content of the news article.

The following is the complete process code. I’ll show the script, but not in the same depth as before because the concept is the same.

# Scraping the first 5 articles
number_of_articles = 5

# Empty lists for content, links and titles
news_contents = []
list_links = []
list_titles = []

for headline in coverpage_news[:number_of_articles]:
    # Getting the link of the article
    link = headline.find('a')['href']

    # only news articles (there are also albums and other things)
    if "inenglish" not in link:
        continue
    list_links.append(link)

    # Getting the title
    title = headline.find('a').get_text()
    list_titles.append(title)

    # Reading the content (it is divided in paragraphs)
    article = requests.get(link)
    soup_article = BeautifulSoup(article.content, 'html5lib')
    body = soup_article.find_all('div', class_='articulo-cuerpo')
    paragraphs = body[0].find_all('p')

    # Unifying the paragraphs
    final_article = " ".join(p.get_text() for p in paragraphs)
    news_contents.append(final_article)

It’s important to note that this code only works for this specific webpage. If we scrape another site, we should expect different tags and attributes to identify the elements. However, once we’ve figured out how to spot them, the procedure is the same.
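One possible way to reuse the same procedure across sites is to keep the tag and class of each site’s article container in a small lookup table. This is a sketch under stated assumptions: SITE_RULES and extract_article_text are hypothetical names, and the "example-paper" entry and the sample HTML are invented for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical per-site settings; the second entry is invented for illustration.
SITE_RULES = {
    "elpais": {"tag": "div", "class_": "articulo-cuerpo"},
    "example-paper": {"tag": "section", "class_": "story-body"},
}


def extract_article_text(html, site):
    """Join the paragraphs found inside the article container of `site`."""
    rules = SITE_RULES[site]
    soup = BeautifulSoup(html, "html.parser")
    body = soup.find(rules["tag"], class_=rules["class_"])
    if body is None:
        # The page layout did not match the rules for this site
        return ""
    return " ".join(p.get_text(strip=True) for p in body.find_all("p"))


sample = '<section class="story-body"><p>First.</p><p>Second.</p></section>'
print(extract_article_text(sample, "example-paper"))
```

Adding a newspaper then means inspecting its HTML once and adding one entry to the table, while the extraction logic stays unchanged.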

We can now extract content from a variety of news stories. The final step is to use the machine learning model we trained in the previous post to predict the category of each article and present a summary to the user. This will be discussed in detail in the series’ last post.
