There are many circumstances in which a developer may be required to interact with Google (Map) Reviews. Everyone familiar with the Google My Business API is aware that an account ID is required for each location (business) to collect reviews using the Google Review Scraper. Scraping Google reviews can be useful in situations when a developer wants to work with evaluations from different sites (or does not have permission to a Google Business account).

This blog will show you the use of Selenium and BeautifulSoup to collect Google Reviews.

What is Web Scraping?

Web scraping is the act of obtaining information from the web. Various libraries may assist you with this, including:

- Beautiful Soup: An excellent tool for parsing the DOM, it simply extracts info from HTML and XML files.

- Scrapy is a free and open-source tool for scraping huge databases at scale.

- Selenium: Browser automation for dealing with JavaScript in sophisticated scraping applications (clicks, scrolls, filling in forms, drag and drop, moving between windows and frames, etc.)

We'll use Selenium to traverse the page, upload more material, and then parse the HTML file with Beautiful Soup.

The Most Effective Monitoring Tools

Selenium

Selenium may be installed using pip or conda (package methods):

#Installing with pip pip install selenium#Installing with conda install -c conda-forge selenium

To communicate with the particular browser, Selenium will require a driver.

BeautifulSoup

BeautifulSoup will be used to parse the Html file and retrieve the information we need. (In our case, review text, reviewer, date, and so forth)

BeautifulSoup must be installed.

#Installing with pip pip install beautifulsoup4#Installing with conda conda install -c anaconda beautifulsoup4

Web Driver Initialization & URL Input

Import and initialize the web driver to get started. Then, using the get function, we will give the Google Maps URL of the place for which we want the reviews:

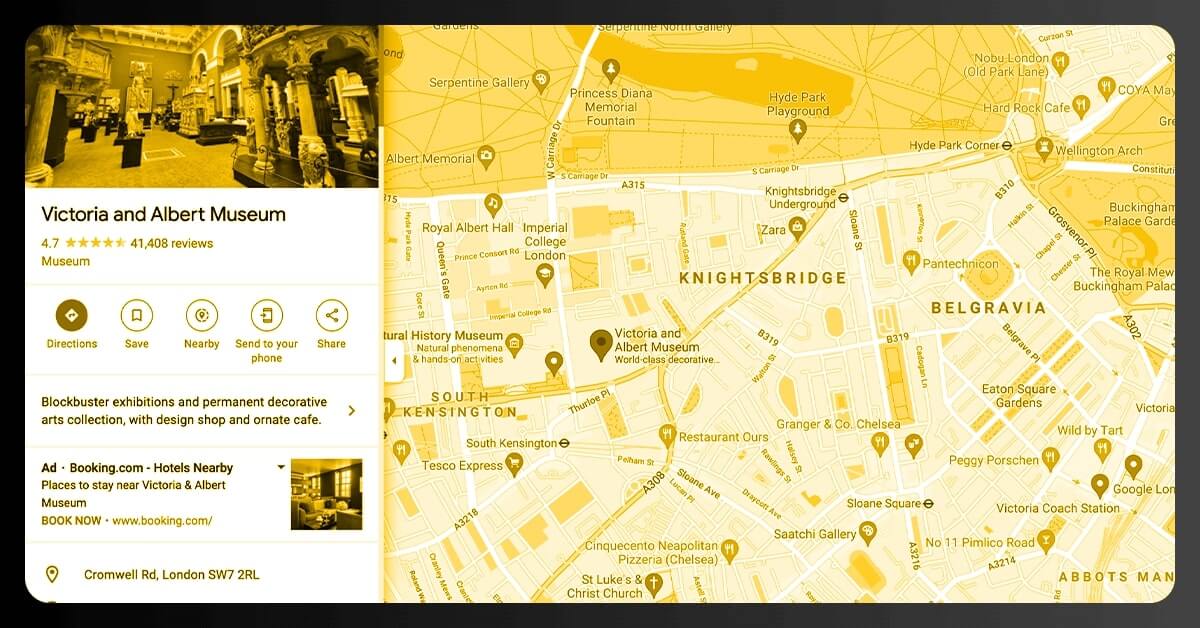

from selenium import webdriver driver = webdriver.Chrome()#London Victoria & Albert Museum URL url = 'https://www.google.com/maps/place/Victoria+and+Albert+Museum/@51.4966392,-0.17218,15z/data=!4m5!3m4!1s0x0:0x9eb7094dfdcd651f!8m2!3d51.4966392!4d-0.17218' driver.get(url)

Navigating

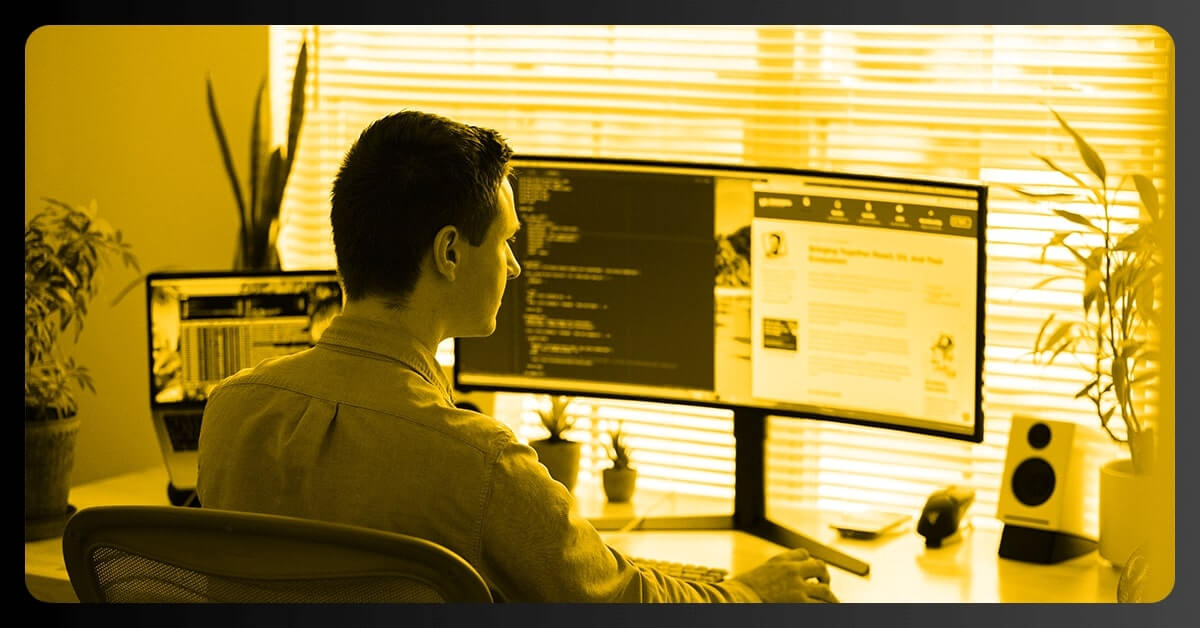

The web driver will mostly certainly encounter the consent Google page to accept to cookies before proceeding to the real web destination we provided through URL variable. If that's the situation, we may proceed by clicking the "I agree" option.

To copy the XPath, click inspect anywhere on the page, and then click inspect again on the "I agree" button once the source code frame appears on the right side. Copy XPath from the copy choices by right-clicking (or double-clicking) on the code.

To explore the website, Selenium provides numerous ways; in this case, I used find_element_by_xpath():

driver.find_element_by_xpath('//*[@id="yDmH0d"]/c-wiz/div/div/div/div[2]/div[1]/div[4]/form/div[1]/div/button').click()#to make sure content is fully loaded we can use time.sleep() after navigating to each page

import time

time.sleep(3)

On Google Maps, there are a few various sorts of profile pages, and on many instances, the location will be presented alongside a lot of other locations listed on the left side, and there will almost certainly be some adverts displayed at the top of the list. The following url, for example, would link us to this page:

url = 'https://www.google.com/maps/search/bicycle+store/@51.5026862,-0.1430242,13z/data=!3m1!4b1'

To avoid being caught on these many sorts of layouts or loading the incorrect page, we will construct an error handler code and move to load reviews.

try:

driver.find_element(By.CLASS_NAME, "widget-pane-link").click()

except Exception:

response = BeautifulSoup(driver.page_source, 'html.parser')

# Check if there are any paid ads and avoid them

if response.find_all('span', {'class': 'ARktye-badge'}):

ad_count = len(response.find_all('span', {'class': 'ARktye-badge'}))

li = driver.find_elements(By.CLASS_NAME, "a4gq8e-aVTXAb-haAclf-jRmmHf-hSRGPd")

li[ad_count].click()

else:

driver.find_element(By.CLASS_NAME, "a4gq8e-aVTXAb-haAclf-jRmmHf-hSRGPd").click()

time.sleep(5)

driver.find_element(By.CLASS_NAME, "widget-pane-link").click()

The code above does the following steps:

- Search for the reviews button by class name (the driver landed on a page in the first example) and click it.

- If the HTML is not parsed using BeautifulSoup, verify the page's contents.

- If the answer (thus the page) contains paid advertisements, count the advertisements, skip, and browse to the Google Maps profile page for the target location; otherwise, travel to the Google Maps profile page for the target location.

- Display reviews (by clicking the reviews button — class name=widget-pane-link)

Scrolling and Loading Reviews

This should lead us to the website in which the reviews may be found. However, the first loading would only deliver ten reviews, with another ten added with subsequent scroll. To retrieve all of the reviews for the location, we will calculate how many times we need to scroll and then utilize the chrome driver's execute¬_script() method.

#Find the total number of reviews

total_number_of_reviews = driver.find_element_by_xpath('//*[@id="pane"]/div/div[1]/div/div/div[2]/div[2]/div/div[2]/div[2]').text.split(" ")[0]

total_number_of_reviews = int(total_number_of_reviews.replace(',','')) if ',' in total_number_of_reviews else int(total_number_of_reviews)#Find scroll layout

scrollable_div = driver.find_element_by_xpath('//*[@id="pane"]/div/div[1]/div/div/div[2]')#Scroll as many times as necessary to load all reviews

for i in range(0,(round(total_number_of_reviews/10 - 1))):

driver.execute_script('arguments[0].scrollTop = arguments[0].scrollHeight',

scrollable_div)

time.sleep(1)

The scrollable element is found first within the for loop above. The scroll bar is initially located at the top, indicating that the vertical location is 0. We change the scroll element’s (scrollable div) vertical position(.scrollTop) to its height by giving a simple JavaScript snippet to the chrome driver (driver.execute script) (.scrollHeight). We are dragging the scroll bar from vertical position 0 to vertical position Y. (Y = whatever height you have)

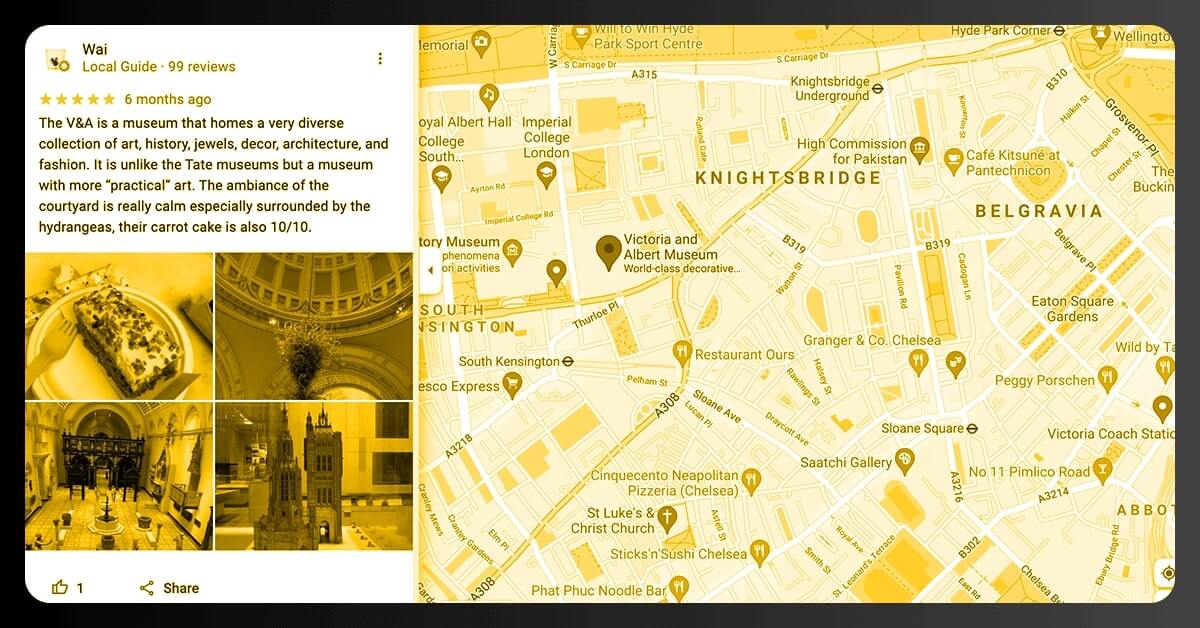

We can now parse them and get the information we want. (Reviewer, Review Text, Review Rate, Review Sentiment, and so on.) Simply discover the similar class name of the outer item displayed inside the scroll layout, which holds all the data about individual reviews, and use it to extract a list of reviews.

Parsing HTML and Data Extraction

response = BeautifulSoup(driver.page_source, 'html.parser')

reviews = response.find_all('div', class_='ODSEW-ShBeI NIyLF-haAclf gm2-body-2')

We can now construct a method to extract useful data from the reviews result set generated by HTML parsing. The code below would accept the answer set and provide a Pandas DataFrame with pertinent extracted data in the Review Rate, Review Time, and Review Text columns.

def get_review_summary(result_set):

rev_dict = {'Review Rate': [],

'Review Time': [],

'Review Text' : []}

for result in result_set:

review_rate = result.find('span', class_='ODSEW-ShBeI-H1e3jb')["aria-label"]

review_time = result.find('span',class_='ODSEW-ShBeI-RgZmSc-date').text

review_text = result.find('span',class_='ODSEW-ShBeI-text').text

rev_dict['Review Rate'].append(review_rate)

rev_dict['Review Time'].append(review_time)

rev_dict['Review Text'].append(review_text)

import pandas as pd

return(pd.DataFrame(rev_dict))

This blog provided a basic overview of web scraping google reviews using Python as well as a simple example. Data sourcing might necessitate the use of complicated data collection methods, and it can be a time-consuming and costly task if the proper tools are not used.

If you are looking for web scraping services or extracting Google Reviews using Selenium and BeautifulSoup, contact Scraping Intelligence today or request for a quote!