Walmart is one of the most well-known retailers. It operates an online store as well as physical locations throughout the world. Walmart not only controls the retail industry with a wide range of products and net sales of $519.93 billion USD, but it also has a wealth of data that can be used to acquire insights into customer behavior, product portfolios, and even market conditions.

We’ll scrape Walmart product Data from Walmart.com and save it in a SQL Server database in this blog. We will extract Walmart Product Price Data using Python. BeautifulSoup is the software that was employed for this scraping experiment. We also used Selenium, a library that enables us to interface with the Google Chrome browser.

Scraping Walmart Product Data

Importing all required libraries is the initial step. We started by setting up the scraper’s flow after we got the packages imported. We started by looking at the structure of the URLs on Walmart.com’s product pages to see how we could modularize our code. A URL, or Uniform Resource Locator, is the location of the new website to which a user is pointing which can be used to distinguish a website uniquely.

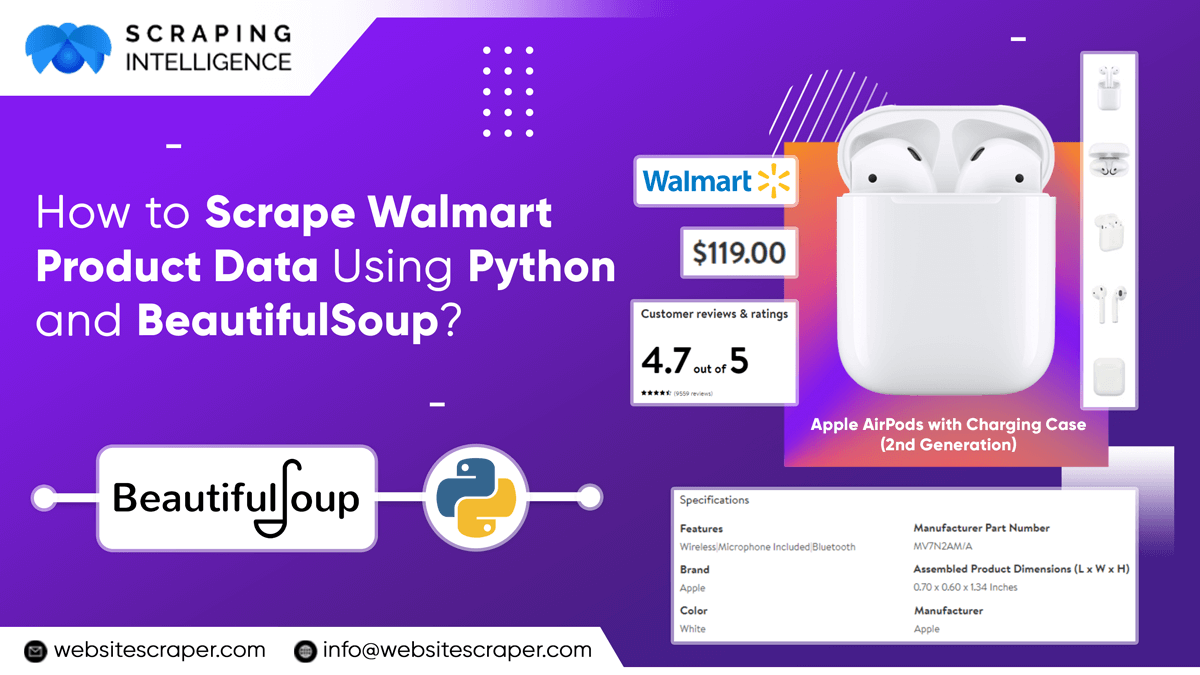

We’ve built a table of URLs for sites in Walmart.com’s electronics department in the example above. We’ve also compiled a list (technically referred to as an array) of the product categories’ names. We’ll use them to name our datasets and tables later.

For each main product category, you can add or delete many more subcategories as you wish. All you have to do is go to the subcategory sites and copy the page’s URL. This location is shared by all of the products on that page. You can repeat this process for as many product categories as you like. For our demonstration, we’ve added categories like Food and Toys to the graphic below.

Keep in mind that we have put the Links in a list because this makes data processing in Python easier. We now can move onto the building scraper once we have these lists ready.

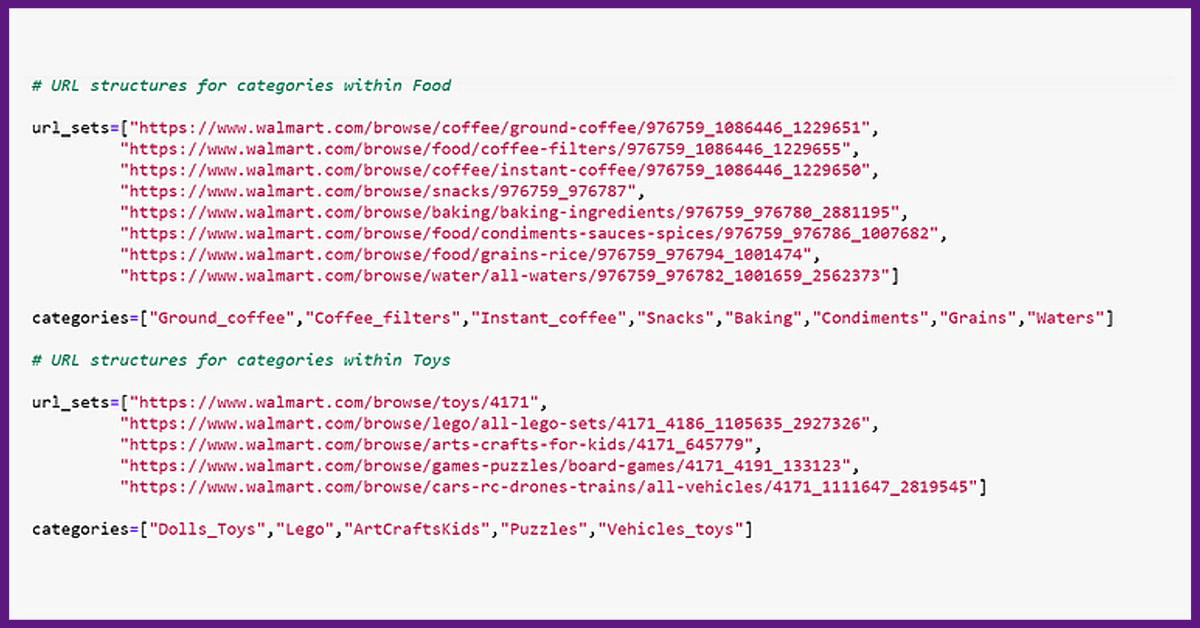

To automate the scraping process, we constructed a loop. We can, however, run it for just one category and subcategory if necessary. Assume we just want to scrape data for a single sub-category, such as TVs in the ‘Electronics’ category. We’ll teach you how to scale the code up for all of the sub-categories later.

We next loop through the top n array 10 times to view the product descriptions and capture the entire web page structure as HTML code. This is analogous to inspecting and copying the HTML code from a web page’s elements (by right-clicking on the page and selecting ‘Inspect Element’). However, we’ve implemented a restriction that only the HTML structure contained within the ‘Body’ tag is retrieved and saved as an object. Because the necessary product details is only found in the HTML body of the page, this is the case.

This class may well be used to retrieve relevant product information for many of the items mentioned on the active page. To do so, we discovered that the product information is contained

within a ‘div’ element with the class’-search-result-gridview-item-wrapper.’ As a result, we employed the find all function to extract all such instances of the above class in the next step.

This data was saved in a temporary object called ‘codelist.’

We’ve now created the URLs for each product. In order to do so, we noticed that all brands begin with the string ‘https://walmart.com/ip’. Only before this string were any unique-identifiers added. The string values retrieved from the’search-result-gridview-item-wrapper’ items stored above were used as the unique identifier. So, in the next step, we cycled through the temporary object code list to build the full URL for a specific product’s website.

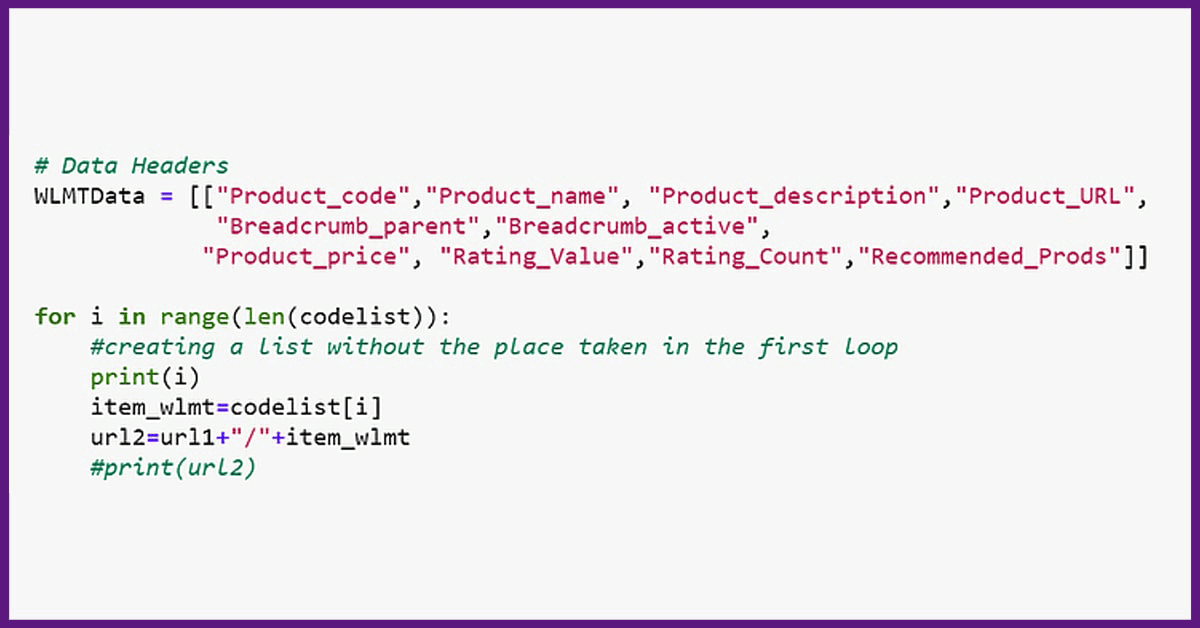

We would indeed be able to get precise information about the product using this URL. For this demonstration, we’re going to pull data such as the unique Product code, Product name, Product description, URL of the product page, name of parent page category in which the product is placed (parent breadcrumb), name of active sub group in which the item is positioned on the website (active breadcrumb), Product price, rating (in terms of stars), number of ratings or reviews for the product, and other similar products suggested on Walmart’s website similar or similar. You can change the order of the items on this list to suit your needs.

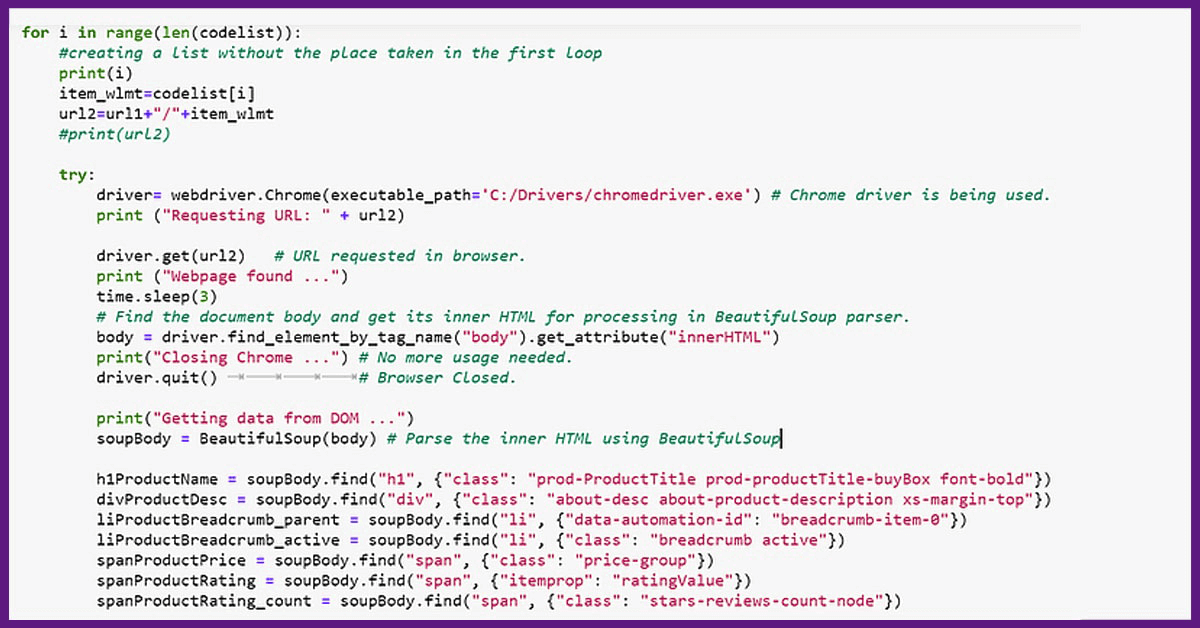

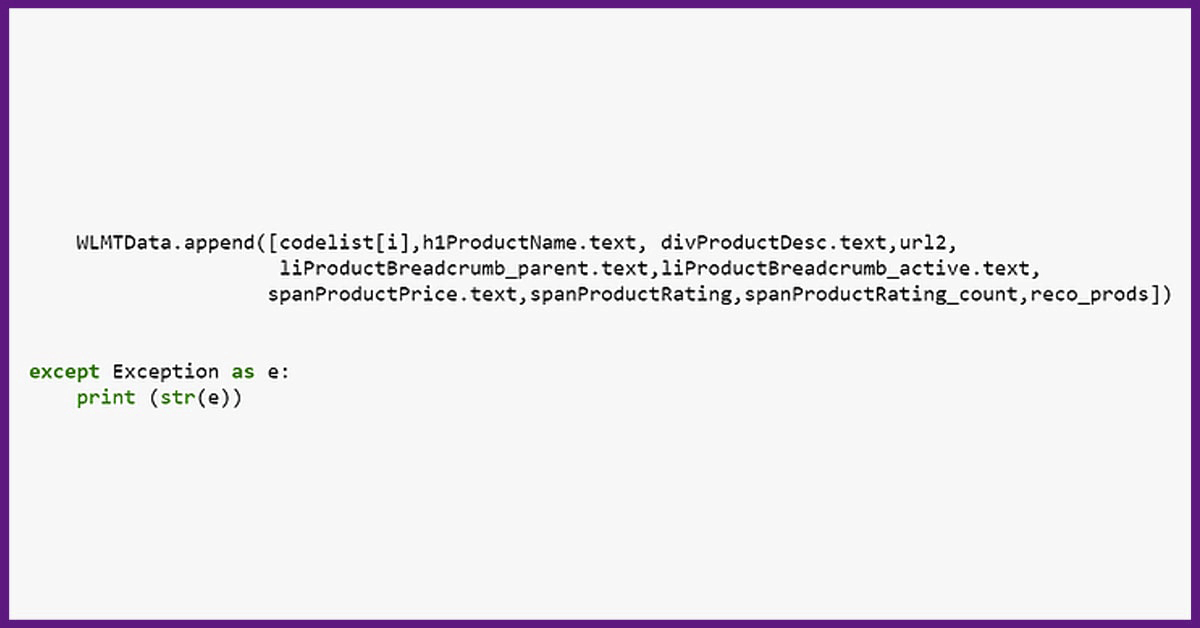

Following the given script, the next step is to open the different product webpage using the created URL and retrieve the product attributes as listed above. The scraper’s next step would be to attach all of this information for all of the goods in a subcategory in a single data frame once you’re comfortable with the list of attributes being pulled in the code. That’s what the code below accomplishes for you.

All of the information for the products on the top 10 pages of the specified subcategory will be in the data frame ‘df’ in your code. You could also export the data to a SQL database or save it as a CSV file. You may use the following code to export it to a MySQL database in a table called ‘product info’:

You’ll must provide passwords for your Sql server database, and then Python will allow you to link your workplace environment directly to the database, allowing you to push your information as a SQL dataset. If a table with the same name already appears in the code above, the present code will overwrite it. To avoid this, you may always alter the script. You can choose to ‘fail,”replace,’ or ‘add’ data here in Python.

This is a simple code structure that may be altered to include warnings for missing data or slow-loading pages. The whole code will look like this if you choose to loop this code for many subcategories:

import os

import selenium.webdriver

import csv

import time

import pandas as pd from selenium

import webdriver from bs4

import BeautifulSoup

url_sets=["https://www.walmart.com/browse/tv-video/all-tvs/3944_1060825_447913", "https://www.walmart.com/browse/computers/desktop-computers/3944_3951_132982",

"https://www.walmart.com/browse/electronics/all-laptop-computers/3944_3951_1089430_132960", "https://www.walmart.com/browse/prepaid-phones/1105910_4527935_1072335",

"https://www.walmart.com/browse/electronics/portable-audio/3944_96469", "https://www.walmart.com/browse/electronics/gps-navigation/3944_538883/",

"https://www.walmart.com/browse/electronics/sound-bars/3944_77622_8375901_1230415_1107398", "https://www.walmart.com/browse/electronics/digital-slr-cameras/3944_133277_1096663",

"https://www.walmart.com/browse/electronics/ipad-tablets/3944_1078524"]

categories=["TVs","Desktops","Laptops","Prepaid_phones","Audio","GPS","soundbars","cameras","tablets"]

# scraper for pg in range(len(url_sets)):

# number of pages per category top_n= ["1","2","3","4","5","6","7","8","9","10"]

# extract page number within sub-category url_category=url_sets[pg]

print("Category:",categories[pg])

final_results = []

for i_1 in range(len(top_n)): print("Page number within category:",i_1) url_cat=url_category+"?page="+top_n[i_1] driver= webdriver.Chrome(executable_path='C:/Drivers/chromedriver.exe') driver.get(url_cat) body_cat = driver.find_element_by_tag_name("body").get_attribute("innerHTML") driver.quit() soupBody_cat = BeautifulSoup(body_cat) for tmp in soupBody_cat.find_all('div', {'class':'search-result-gridview-item-wrapper'}): final_results.append(tmp['data-id'])

# save final set of results as a list codelist=list(set(final_results))

print("Total number of prods:",len(codelist)) # base URL for product page url1= "https://walmart.com/ip"

# Data Headers WLMTData = [["Product_code","Product_name","Product_description","Product_URL", "Breadcrumb_parent","Breadcrumb_active","Product_price", "Rating_Value","Rating_Count","Recommended_Prods"]]

for i in range(len(codelist)):

#creating a list without the place taken in the first loop print(i) item_wlmt=codelist[i] url2=url1+"/"+item_wlmt

#print(url2) try: driver= webdriver.Chrome(executable_path='C:/Drivers/chromedriver.exe')

# Chrome driver is being used. print ("Requesting URL: " + url2) driver.get(url2)

# URL requested in browser. print ("Webpage found ...") time.sleep(3)

# Find the document body and get its inner HTML for processing in BeautifulSoup parser. body = driver.find_element_by_tag_name("body").get_attribute("innerHTML") print("Closing Chrome ...")

# No more usage needed. driver.quit()

# Browser Closed. print("Getting data from DOM ...") soupBody = BeautifulSoup(body)

# Parse the inner HTML using BeautifulSoup h1ProductName = soupBody.find("h1", {"class": "prod-ProductTitle prod-productTitle-buyBox font-bold"})

divProductDesc = soupBody.find("div", {"class": "about-desc about-product-description xs-margin-top"}) liProductBreadcrumb_parent = soupBody.find("li", {"data-automation-id": "breadcrumb-item-0"}) liProductBreadcrumb_active = soupBody.find("li", {"class": "breadcrumb active"}) spanProductPrice = soupBody.find("span", {"class": "price-group"}) spanProductRating = soupBody.find("span", {"itemprop": "ratingValue"}) spanProductRating_count = soupBody.find("span", {"class": "stars-reviews-count-node"}) ################# exceptions #########################

if divProductDesc is None: divProductDesc="Not Available" else: divProductDesc=divProductDesc if liProductBreadcrumb_parent is None: liProductBreadcrumb_parent="Not Available" else: liProductBreadcrumb_parent=liProductBreadcrumb_parent

if liProductBreadcrumb_active is None: liProductBreadcrumb_active="Not Available" else: liProductBreadcrumb_active=liProductBreadcrumb_active if spanProductPrice is None: spanProductPrice="NA" else: spanProductPrice=spanProductPrice

if spanProductRating is None or spanProductRating_count is None: spanProductRating=0.0 spanProductRating_count="0 ratings" else: spanProductRating=spanProductRating.text spanProductRating_count=spanProductRating_count.text

### Recommended Products reco_prods=[] for tmp in soupBody.find_all('a', {'class':'tile-link-overlay u-focusTile'}): reco_prods.append(tmp['data-product-id']) if len(reco_prods)==0: reco_prods=["Not available"] else: reco_prods=reco_prods WLMTData.append([codelist[i],h1ProductName.text,ivProductDesc.text,url2, liProductBreadcrumb_parent.text, liProductBreadcrumb_active.text, spanProductPrice.text, spanProductRating, spanProductRating_count,reco_prods]) except Exception as e: print (str(e)) # save final result as dataframe df=pd.DataFrame(WLMTData) df.columns = df.iloc[0] df=df.drop(df.index[0])

# Export dataframe to SQL import sqlalchemy database_username = 'ENTER USERNAME' database_password = 'ENTER USERNAME PASSWORD' database_ip = 'ENTER DATABASE IP' database_name = 'ENTER DATABASE NAME' database_connection = sqlalchemy.create_engine('mysql+mysqlconnector://{0}:{1}@{2}/{3}'. format(database_username, database_password, database_ip, base_name)) df.to_sql(con=database_connection, name='‘product_info’', if_exists='replace',flavor='mysql')

You may indeed add additional complexity to this system to make your scraper more customizable. For example, the scraper above handles missing data in properties such as description, price, and reviews. This information may be missing for a variety of reasons, including the product being sold out and out of stock, inaccurate data entry, or the product being too new to have any ratings or information at this time.

You’ll have to keep modifying your scraper to adapt to different site structures and maintain it effective when the webpage is refreshed. This Walmart Data scraper serves as a starting point for a Python scraper on Walmart.com.

Looking for Walmart Product Data Scraping Services using Python and BeautifulSoup?

Contact Scraping Intelligence now and request a quote!