Google Reviews are an invaluable source of real user feedback. Paying attention to them is like receiving insider information from your clients: by reading reviews you can identify what makes customers happy, address issues, improve your offerings, and grow your business.

Whether you are a data enthusiast or a business owner trying to increase customer satisfaction, Google SERP scraping can yield actionable insights, guide strategic decisions, and offer a nuanced understanding of constantly changing customer preferences and experiences.

What is Google SERP?

The Google SERP, or Search Engine Results Page, is the page Google returns in response to a user's search query. It lists the results that are relevant to the word or phrase typed into the search field, and each item on the page is called a search result.

Typically, the Google SERP consists of several components intended to give consumers a wide range of relevant information.

Here are some key components you might find on a typical Google SERP:

Organic Search Results

These are the standard listings of web pages that Google's algorithm considers most relevant to the search query. Each result typically includes a title, a short description (meta description), and a URL.

Featured Snippets

Featured snippets are brief answers or summaries shown at the top of the search results, often taken directly from a web page. Google picks these snippets to give users quick access to pertinent information.

Knowledge Graph

On the right side of the SERP, you might see a Knowledge Graph panel displaying details relevant to the search query. This may include facts, data, and images of entities like people, places, or things.

Sponsored Advertisements

Paid advertisements, also identified as Google Ads, emerge at the SERP's top and bottom, labeled as "Ads." Advertisers compete for keywords, and their ads show to users with matching search queries.

Local Pack

For queries related to locations, a Local Pack could show up, showcasing a map and a set of local business entries. Each business entry usually contains its name, location, contact number, and reviews.

Image and Video Results

Depending on the nature of the search query, Google might present images or videos in the results, delivering users multimedia content.

Related Searches

Towards the lower part of the SERP, you frequently encounter a section with related searches. These are additional queries associated with the initial search term, aiding users in further exploration.

What is Python Web Scraping?

Python web scraping is the process of using the Python programming language to extract information from websites.

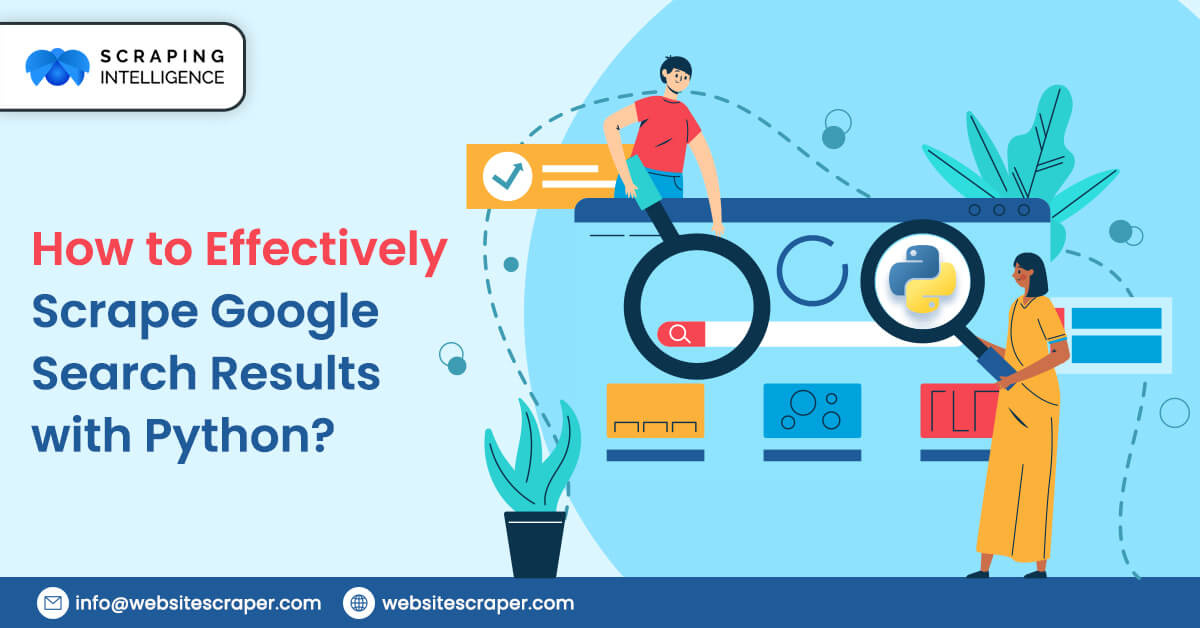

This involves writing code that can programmatically interact with web pages, fetch data, and then parse and extract relevant information for further analysis or storage. The key components of Python web scraping involve:

Requesting Web Pages

Python libraries such as requests make it easy to send HTTP requests to web servers. This lets the script retrieve a web page's HTML content, which forms the basis for all further processing.

HTML Parsing

Once the HTML is obtained, Python libraries such as BeautifulSoup or lxml parse it into a tree structure. This makes it easy to navigate the HTML tree and locate the specific elements to extract.

Data Extraction

Using the parsed HTML, a web scraping program can locate and retrieve the required data. This can be any content on the page, including text, images, tables, and links.
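The parse and extract stages can be tried without any network access. The sketch below is a minimal illustration that uses only Python's standard-library `html.parser` instead of BeautifulSoup; the `LinkExtractor` class, the `extract_links` helper, and the sample HTML are hypothetical names and data made up for this example.

```python
from html.parser import HTMLParser

class LinkExtractor(HTMLParser):
    """Collect (href, text) pairs for every <a href=...> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> tag currently open, if any
        self._text = []     # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None

# Made-up page used only for this demonstration
SAMPLE_HTML = """
<html><body>
  <h1>Cafes</h1>
  <a href="https://example.com/a">Cafe <b>A</b></a>
  <a href="https://example.com/b">Cafe B</a>
</body></html>
"""

def extract_links(html):
    """Parse the HTML and return the list of (href, text) pairs."""
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links

for href, text in extract_links(SAMPLE_HTML):
    print(text, "->", href)
```

In a real scraper the HTML string would come from an HTTP response rather than a constant, and a library such as BeautifulSoup would usually replace the hand-written parser class.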

How to Extract Google Search Results Using Python

We will scrape the Google search results in the steps below. Here, we use BeautifulSoup, a Python library, to extract SERP results and other required data.

Step 1: Set up the required environment to initiate data scraping activities. The two libraries used below can be installed with pip (pip install requests beautifulsoup4).

Step 2: After establishing the coding environment, let's get started with the parsing and data extraction procedure for Google search results. The requests library will be used to perform HTTP requests and obtain the HTML response, and the BeautifulSoup library will be used to parse and explore the HTML structure. Let's create a new file and import the libraries:

import requests
from bs4 import BeautifulSoup

Let's set request headers so that the scraper looks like a regular browser, which reduces the likelihood of being blocked:

header={'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36'}

It is preferable to send up-to-date headers with the request. For instance, you can use your own browser's User-Agent, which is shown in the Network tab of DevTools. Next, we run the query and assign the response to a variable:

data = requests.get('https://www.google.com/search?q=cafe+in+new+york', headers=header)
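The query string in the URL above is hard-coded. A minimal sketch of building it from an arbitrary search phrase with the standard library is shown below; `build_search_url` and its `extra_params` argument are hypothetical names introduced for illustration and are not part of the original script.

```python
from urllib.parse import urlencode

GOOGLE_SEARCH = "https://www.google.com/search"

def build_search_url(query, extra_params=None):
    """Return a search URL with the query safely URL-encoded."""
    params = {"q": query}
    if extra_params:
        params.update(extra_params)
    # urlencode turns spaces into '+' and percent-encodes reserved characters
    return f"{GOOGLE_SEARCH}?{urlencode(params)}"

print(build_search_url("cafe in new york"))
# https://www.google.com/search?q=cafe+in+new+york
```

The resulting string can be passed to `requests.get` in place of the literal URL, which avoids broken requests when a query contains characters such as `&` or `#`.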

We already have the page's code at this point, so we just need to parse it. Create a BeautifulSoup object from the HTML of the response:

soup = BeautifulSoup(data.content, "html.parser")

Additionally, let's create a results list in which we will store the data for each element:

results = []

Note that every search result on the page is a div with the class 'g', so we can work with the results individually. In other words, we gather all the elements with class 'g', go over each one, and extract the relevant information. Let's build a for loop to accomplish this:

for g in soup.find_all('div', {'class':'g'}):

Furthermore, if we examine the page's code closely, we can see that each result contains an <a> tag, which holds the page link, and an <h3> tag with the title. Using that, let's obtain the title, link, and description. Because the description may be missing, we wrap that lookup in a try/except block.

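    anchors = g.find_all('a')
    title_tag = g.find('h3')
    # Skip blocks that have no link or no title
    if anchors and title_tag:
        link = anchors[0]['href']
        title = title_tag.text
        try:
            description = g.find('div', {'data-sncf':'2'}).text
        except AttributeError:
            # find() returned None: this result has no description block
            description = "-"

Still inside the if block, add the element's data to the results variable:

        results.append(str(title)+";"+str(link)+';'+str(description))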
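To check the extraction logic without sending a request to Google, the same selectors can be run against a small hand-written HTML sample. The sketch below assumes the `beautifulsoup4` package is installed; `parse_results` and `SAMPLE_HTML` are hypothetical names, and the sample markup only imitates the structure described above, so real result pages may differ.

```python
from bs4 import BeautifulSoup

# Made-up markup that mirrors the selectors used in this article
SAMPLE_HTML = """
<div class="g">
  <a href="https://example.com/cafe-a"><h3>Cafe A</h3></a>
  <div data-sncf="2">Cozy spot in Brooklyn.</div>
</div>
<div class="g">
  <a href="https://example.com/cafe-b"><h3>Cafe B</h3></a>
</div>
<div class="g"><span>No link or title here</span></div>
"""

def parse_results(html):
    """Extract title, link and description from each div with class 'g'."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for g in soup.find_all("div", class_="g"):
        anchor = g.find("a", href=True)
        title_tag = g.find("h3")
        if anchor is None or title_tag is None:
            continue  # skip blocks that are not ordinary results
        desc_tag = g.find("div", attrs={"data-sncf": "2"})
        rows.append({
            "title": title_tag.get_text(strip=True),
            "link": anchor["href"],
            "description": desc_tag.get_text(strip=True) if desc_tag else "-",
        })
    return rows

for row in parse_results(SAMPLE_HTML):
    print(row)
```

Checking for `None` before reading attributes replaces the try/except approach with explicit guards; either style works, but the guards make it clearer which element was missing.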

We could now print the results variable to the screen, but let's go one step further and store the information in a file. To do this, create (or overwrite) a file with the columns "Title", "Link", and "Description":

with open("serp.csv", "w", encoding="utf-8") as f:
    f.write("Title; Link; Description\n")

Now let's append the information, line by line, from the results variable:

# Open the file once in append mode and write every result
with open("serp.csv", "a", encoding="utf-8") as f:
    for result in results:
        f.write(str(result)+"\n")
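Joining fields with ";" by hand breaks as soon as a title or description itself contains a semicolon. As an alternative, the sketch below uses Python's built-in `csv` module, which quotes such fields automatically; `write_results` is a hypothetical helper, and the demo row is made-up data.

```python
import csv
import io

def write_results(rows, fileobj):
    """Write (title, link, description) tuples as semicolon-separated CSV."""
    writer = csv.writer(fileobj, delimiter=";", lineterminator="\n")
    writer.writerow(["Title", "Link", "Description"])
    writer.writerows(rows)

# Demo on an in-memory buffer; a real run would instead pass
# open("serp.csv", "w", newline="", encoding="utf-8")
buffer = io.StringIO()
write_results([("Cafe; Bar", "https://example.com", "-")], buffer)
print(buffer.getvalue())
```

With this approach the results list would hold tuples rather than pre-joined strings, and the file can be read back reliably with `csv.reader` using the same delimiter.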

The whole code at a glance:

import requests
from bs4 import BeautifulSoup

url = 'https://www.google.com/search?q=cafe+in+new+york'
header={'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36'}

data = requests.get(url, headers=header)

# Defined before the status check so the file-writing step never sees an undefined name
results = []

if data.status_code == 200:
    soup = BeautifulSoup(data.content, "html.parser")
    # Each search result is a div with class 'g'
    for g in soup.find_all('div', {'class':'g'}):
        anchors = g.find_all('a')
        title_tag = g.find('h3')
        # Skip blocks that have no link or no title
        if anchors and title_tag:
            link = anchors[0]['href']
            title = title_tag.text
            try:
                description = g.find('div', {'data-sncf':'2'}).text
            except AttributeError:
                # find() returned None: this result has no description block
                description = "-"
            results.append(str(title)+";"+str(link)+';'+str(description))

# Write the header row, then one line per result
with open("serp.csv", "w", encoding="utf-8") as f:
    f.write("Title; Link; Description\n")
    for result in results:
        f.write(str(result)+"\n")

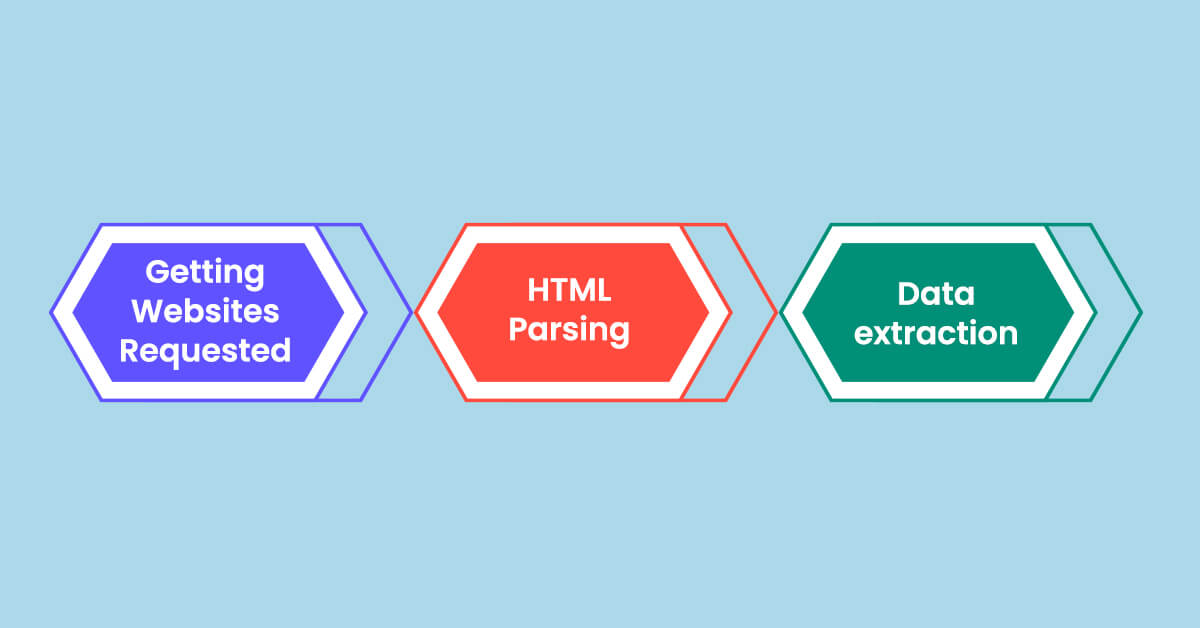

Difficulties in Scraping Google Search Results

Google SERP scraping is undoubtedly difficult because of the sophisticated algorithms and strict policies that Google uses to protect user privacy and guarantee fair use of its services. These challenges stem from Google's commitment to preserving the integrity of its search engine.

Anti-Scraping Measures

Google employs advanced algorithms to spot and prevent automated scraping efforts. These systems examine a number of variables, such as request frequency and pattern, to detect and prevent suspicious activity.
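Because request frequency and pattern are among the signals examined, a scraper should at least keep its request rate low and irregular. The sketch below shows one way to pause a randomized interval between requests; `jittered_delay`, `crawl_politely`, and the default timings are hypothetical choices for illustration, not values known to satisfy any particular detection system.

```python
import random
import time

def jittered_delay(base, jitter, rng=random):
    """Return a wait time of `base` seconds plus up to `jitter` extra seconds."""
    return base + rng.uniform(0, jitter)

def crawl_politely(urls, fetch, base=2.0, jitter=3.0, sleep=time.sleep, rng=random):
    """Call fetch(url) for each URL, pausing a randomized interval in between."""
    responses = []
    for index, url in enumerate(urls):
        if index > 0:
            # No pause before the first request, only between requests
            sleep(jittered_delay(base, jitter, rng))
        responses.append(fetch(url))
    return responses
```

Here `fetch` is any callable that takes a URL, for example a small wrapper around `requests.get`. Passing `sleep` and `rng` as parameters makes the pacing logic easy to test without actually waiting.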

CAPTCHA Challenges

To deter automated bots, Google frequently employs CAPTCHAs (Completely Automated Public Turing test to tell Computers and Humans Apart). These challenges require human intervention to solve, which stops scraping bots from proceeding.

Dynamic Page Layouts

Depending on the user's location, search history, and type of query, Google's search results page layout is dynamic and subject to change. It takes time to adapt Google SERP scraping programs to these dynamic changes.

IP Blocking

Google monitors the volume and frequency of requests coming from particular IP addresses. An IP address that engages in excessive scraping may be blocked temporarily or permanently, after which it can no longer access search results.
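One common way to react to rate limiting is to back off and retry with growing pauses instead of hammering the server. The sketch below assumes that a throttled request surfaces as HTTP status 429 (Too Many Requests); `fetch_with_backoff` and the `fetch` callable returning a `(status, body)` pair are hypothetical names used only for this illustration.

```python
import time

def fetch_with_backoff(fetch, url, max_retries=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) -> (status, body); retry on HTTP 429 with doubling waits."""
    for attempt in range(max_retries + 1):
        status, body = fetch(url)
        if status != 429:
            return status, body
        if attempt < max_retries:
            # Wait 1x, 2x, 4x ... the base delay before the next attempt
            sleep(base_delay * 2 ** attempt)
    # Still throttled after the last retry: hand the 429 back to the caller
    return status, body
```

If the final attempt is still throttled, the function returns the 429 response so the caller can stop the crawl rather than keep retrying.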

Legal and Ethical Ramifications

Google's terms of service explicitly restrict automated access to the company's services. Scraping the SERP without permission may lead to penalties or other legal repercussions.
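Alongside the terms of service, a site's robots.txt file states which paths automated clients are asked to avoid, and it can be checked programmatically. The sketch below uses the standard-library `urllib.robotparser`; the `is_allowed` helper is a hypothetical name, and the rules in `SAMPLE_ROBOTS_TXT` are an illustrative excerpt written for this example, so a real check should fetch the site's live file. Passing this check does not by itself mean that the terms of service permit the access.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules only; fetch the site's live robots.txt in practice
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""

def is_allowed(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

print(is_allowed(SAMPLE_ROBOTS_TXT, "https://www.google.com/search?q=cafe"))
```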

Regular Algorithmic Revisions

In order to enhance relevancy and guard against manipulation, Google frequently modifies its search algorithms. Scraping tools need to be updated to reflect these algorithmic changes in order to continue being accurate and effective.

Limits on the Number of Results

For every search query, Google caps the number of results it returns. For scraping projects that aim to gather large datasets, this restriction can be a problem.
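Within that cap, results are spread over several pages. The sketch below builds one URL per page using a `start` offset in the query string; it assumes ten results per page and that the `start` parameter behaves as an offset, both of which are assumptions about Google's current behavior rather than guarantees. `page_urls` is a hypothetical helper name.

```python
from urllib.parse import urlencode

def page_urls(query, pages, page_size=10):
    """Return one search URL per results page, offset via the `start` parameter."""
    base = "https://www.google.com/search"
    urls = []
    for page in range(pages):
        params = {"q": query, "start": page * page_size}
        urls.append(f"{base}?{urlencode(params)}")
    return urls

for url in page_urls("cafe in new york", 3):
    print(url)
```

Requesting pages beyond what Google is willing to serve simply yields no further results, so a crawl should stop as soon as a page comes back empty.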

Use Cases of Google SERP Scraping

Google Reviews data is extremely valuable for various industries. It provides businesses with important information to improve operations, refine their products/services, and position themselves strategically in the market.

Company Observations and Improvements

Google Reviews provide businesses with a direct line of communication with customers, allowing them to determine overall sentiment and identify areas in need of improvement. By addressing specific problems or enhancing features that customers find appealing, businesses can make data-driven decisions by examining review trends.

Monitoring the Business Reputation

By monitoring Google Reviews, businesses can communicate with customers directly, responding promptly to both positive and negative feedback and demonstrating their commitment to customer satisfaction. Positive reviews that appear in search results are a great way for a business to stand out from competitors in the eyes of potential customers.

Branding and Promotion

Positive remarks about a company in reviews can be used in advertisements and other materials to highlight how satisfied customers are with the product or service. Seeing that other customers have had positive experiences makes prospective customers more likely to trust the company. Advertisements and promotions can also highlight specific qualities of a product or service that buyers find particularly appealing. In this way, businesses can showcase the features that set their product apart and draw in new clients who are interested in those features.

Local SEO Optimization

The location of a business is frequently mentioned in reviews left on Google. Employing these regional terms increases the company's online visibility in local searches. People in the area will be more likely to locate and select that business as a result. Positive evaluations from members of the same community make a business seem more reliable to other residents. Good reviews can persuade those in the vicinity to choose that company over competitors because they observe that their neighbors are happy with it.

Engagement of Consumers

Businesses give customers a sense of worth and attention when they reply to their feedback. Customers who feel a connection to the company are more likely to remain loyal. Companies can also invite clients to submit reviews as a way to express their opinions. This keeps clients engaged and helps the company identify what they like and don't like. In effect, it tells customers, "We want to hear from you!"

Conclusion

Google SERP scraping with Python can yield significant information, but it is critical to proceed with caution. Developers can extract information legally and ethically by following Google's terms of service and using the Google Places API. To keep the online platform experience courteous and pleasant for everyone, always ask permission first, be open and honest about your objectives, and prioritize compliance.

Innovation is supported by ethical behaviors, which are the cornerstones of a successful coding career. Scraping Intelligence uses an effective Google SERP scraper to extract the required data ethically while ensuring data security. Our developers assist in creating a positive impact in a collaborative and trustworthy digital environment by adopting appropriate data access practices.