The food delivery sector has seen steady innovation over time. The online food delivery market is projected to generate revenue of about US$0.84 trillion next year. However, as the market grows, it is becoming harder for businesses to get noticed and, ideally, to become self-sustaining. The food service and delivery industry keeps working hard to stand out, and the companies involved keep introducing new concepts supported by new technologies.

Key Statistics

Manually reviewing all the data on competitor websites is difficult and time-consuming, and without the right tools it is almost impossible to keep up with that volume of information. The online food delivery market is expected to grow significantly, reaching around $192 billion in sales by 2025, up from about $127 billion in 2021. With so many people using food delivery apps and hundreds of restaurants listed on them, competition is intense. To stay competitive, restaurants and food chains are turning to analytics tools to understand what their customers like. Many rely on web data extraction services that gather data from apps such as Uber Eats, Zomato, and Swiggy, which helps them make better marketing and pricing decisions. If you want to improve your restaurant or food delivery business, data extraction tools are well worth considering.

What is Food Delivery Data Scraping?

Food delivery data scraping is the process of automatically retrieving data from food delivery websites. Such data can include anything from menus and prices to customer feedback, delivery times, and much more. Using web scraping techniques, developers can write scripts or programs that visit food delivery sites, navigate through their pages, and extract useful data. Once collected, this data can be analyzed for many purposes, such as building recommendation systems, analyzing market trends, or comparing prices across restaurants.
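To make the extraction step concrete, here is a minimal sketch using Python's built-in `html.parser` module. It assumes a simplified, hypothetical menu page where each dish sits in an `<li>` with `<span class="name">` and `<span class="price">` children. Real delivery platforms use their own markup, which is often rendered by JavaScript and changes over time, so the tag and class names here are placeholders to adapt.

```python
from html.parser import HTMLParser

# Hypothetical, simplified markup; real platforms use different (and changing) structures.
SAMPLE_HTML = """
<ul class="menu">
  <li class="item"><span class="name">Margherita Pizza</span><span class="price">$11.50</span></li>
  <li class="item"><span class="name">Caesar Salad</span><span class="price">$8.25</span></li>
</ul>
"""


class MenuParser(HTMLParser):
    """Collect {"name", "price"} dicts from <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.items = []     # completed menu items
        self._field = None  # "name" or "price" while inside a matching <span>
        self._current = {}  # item currently being assembled

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "span" and cls in ("name", "price"):
            self._field = cls

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            # An item ends with its </li>; store it and start a new one.
            self.items.append(self._current)
            self._current = {}

    def handle_data(self, data):
        if self._field == "name":
            self._current["name"] = data.strip()
        elif self._field == "price":
            # Drop the currency symbol and convert the price to a number.
            self._current["price"] = float(data.strip().lstrip("$"))


def parse_menu(html):
    """Return the list of menu items found in an HTML string."""
    parser = MenuParser()
    parser.feed(html)
    parser.close()
    return parser.items


print(parse_menu(SAMPLE_HTML))
```

Each parsed item becomes a plain dictionary. That keeps the later steps, such as price comparison or export, independent of the page markup.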

Food delivery data scraping can help businesses make better decisions, improve user experience, and streamline operations within the food delivery sphere. However, scraping should be done in a way that does not violate the terms of service or copyright policies of the target platforms, and the data should be used only for its intended purposes.

Data Types

The information gathered from food delivery websites offers essential insights into trends in the market, how customers behave, and what strategies competitors are using.

Menu Items

This is like a list of all the foods available at a restaurant, including descriptions and prices. Think of it as a menu you can browse online.

Prices

This is the cost of each food item, including any extra fees like delivery charges or taxes.

Delivery Times

This tells you how long it will take for your food to arrive after you place an order. It depends on where you live and how busy the restaurant is.

Restaurant Details

This includes information about the restaurant, like its name, address, and phone number, so you know where to find it and how to contact them.

Customer Reviews

These are comments and ratings from people who have ordered from the restaurant before. Reviewers describe things such as the taste of the food, how fast it was delivered, and whether they liked it.

Promotions and Discounts

Information on any special offers, discounts, or promotions available at specific restaurants or for certain menu items. This data helps attract customers and incentivize them to place orders, especially during promotional periods or festive seasons.

Availability

This tells you which foods are currently available to order and if any items are not available right now. It helps you choose what to order without being disappointed.

Restaurant Ratings

These are overall scores given to the restaurant based on customer reviews. Higher ratings usually mean better quality and service.

Order History

This shows a list of all the orders you've placed in the past, including what you ordered, how much you paid, and whether it was delivered.

User Preferences

This is information about your personal preferences, such as your favorite restaurants or the types of food you like to order. It helps the website recommend foods you might enjoy based on your past orders.

The Importance of Web Scraping for Your Food Delivery Business

Web scraping helps food delivery businesses extract diverse datasets. By studying this information, businesses can make smarter choices, improve their products and services, and keep up with the fast-changing food delivery industry.

Understanding the Market

Web scraping allows you to collect essential data from your competitors' websites, such as the services they offer, their delivery charges, and how customers rate them. With this information, you can research what customers want, which markets sell best, and which gaps you could fill. Monitoring demand is equally important, since it helps ensure your business offers what customers need.

Keeping an Eye on Prices and Competitors

Using web scraping, you can track up-to-date prices and keep an eye on your competitors. This helps you adjust your prices to compete with rivals and grow your customer base. Moreover, when you know what promotions and special deals your rivals offer, you can plan marketing strategies that outmatch them and attract consumers' attention.
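As an illustration of price monitoring, the sketch below compares your prices with the median of scraped competitor prices for the same dish. The dish names and prices are made-up sample data. Using the median as the benchmark is one reasonable choice among several, not a prescribed method.

```python
from statistics import median

# Made-up sample data: dish -> prices scraped from competitors (USD).
competitor_prices = {
    "Margherita Pizza": [10.99, 12.50, 11.25],
    "Caesar Salad": [7.50, 8.00, 9.75],
}
our_prices = {"Margherita Pizza": 12.99, "Caesar Salad": 7.95}


def price_gaps(ours, theirs):
    """Return dish -> (our price minus competitor median), rounded to cents.

    Dishes with no competitor data are skipped.
    """
    gaps = {}
    for dish, price in ours.items():
        if theirs.get(dish):
            gaps[dish] = round(price - median(theirs[dish]), 2)
    return gaps


for dish, gap in price_gaps(our_prices, competitor_prices).items():
    if gap > 0:
        position = f"${gap:.2f} above"
    elif gap < 0:
        position = f"${-gap:.2f} below"
    else:
        position = "equal to"
    print(f"{dish}: {position} the competitor median")
```

A median is less sensitive than a mean to a single competitor running an unusually deep discount.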

Improving Your Menu

Through web scraping, you can review the menus of many restaurants to find out which dishes are in demand and which food trends are currently popular. This helps you keep your menu balanced, and once changes go live, you can gather customer feedback on your dishes. You can then add items that match your customers' tastes and remove items that do not appeal to them, making your menu more attractive to your target customers.

Making Customers Happier

By carefully analyzing reviews and feedback from various websites, you will learn what customers appreciate about your business and what problems your competitors face. This shows you which areas need attention and what to change to offer customers a better experience. Whether the issue is food quality, delivery speed, or customer service, web scraping helps you make decisions that keep your customers happier.

Working Smarter, Not Harder

Using web scraping to collect data saves you a great deal of time and effort compared to doing it manually. Instead of spending hours gathering data by hand, you can get the information you need quickly and easily. This gives you more time to concentrate on other crucial aspects of running a food delivery company, such as marketing, customer service, and developing new ideas.

Best Practices for Extracting Food Delivery Data

Respect Website Policies

Take the time to read and understand the terms of service and scraping policies of the websites you're scraping. These policies outline the rules and restrictions for accessing and using their data. Adhering to these policies not only ensures legal compliance but also fosters a positive relationship with the website owners.

Limit Requests

Sending too many requests to a website in a short period can overwhelm its servers and may lead to your IP address being blocked. Implement rate limiting and delay mechanisms in your scraping script to control the frequency of requests and prevent overloading the server.
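A minimal sketch of such a delay mechanism in Python enforces a fixed minimum interval between consecutive requests. The interval you choose is a judgment call, and a site's `Crawl-delay` or terms may call for a longer one. The `clock` and `sleep` parameters are this sketch's own design choice. They are injectable so the logic can be tested without real waiting.

```python
import time


class RateLimiter:
    """Enforce a minimum interval (in seconds) between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval has passed since the previous call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


limiter = RateLimiter(min_interval=0.1)  # 0.1 s only to keep the demo short
start = time.monotonic()
for page in range(1, 4):
    limiter.wait()
    # A real script would fetch the page here.
    print(f"request {page} at {time.monotonic() - start:.2f}s")
```

Using `time.monotonic` rather than wall-clock time keeps the spacing correct even if the system clock is adjusted mid-run.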

Use Ethical Scraping

Stick to scraping data that is publicly available and avoid accessing restricted or private information. Additionally, respect copyright and intellectual property rights by obtaining permission or by using data in line with fair use principles.

Handle Errors Gracefully

Errors can occur during the scraping process for various reasons, such as network issues or changes in website structure. Implement error handling mechanisms in your script to gracefully handle errors, retry failed requests, and log errors for troubleshooting purposes.
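One common way to implement this is retry with exponential backoff plus logging, sketched below with the standard library. Several choices here are assumptions rather than fixed rules: which HTTP status codes count as temporary (429 and 5xx), the retry count, and the backoff base. The `fetch` argument is any function that takes a URL and returns the page. `fetch_html` is one such function built on `urllib.request.urlopen`.

```python
import logging
import time
import urllib.error
import urllib.request

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("scraper")

# Assumed set of status codes that usually indicate a temporary problem.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def is_retryable(exc):
    """Decide whether an exception looks transient enough to retry."""
    if isinstance(exc, urllib.error.HTTPError):
        return exc.code in RETRYABLE_STATUS
    # URLError covers DNS and connection failures; TimeoutError covers slow responses.
    return isinstance(exc, (urllib.error.URLError, TimeoutError, ConnectionError))


def fetch_with_retry(fetch, url, retries=3, backoff=1.0, sleep=time.sleep):
    """Call fetch(url), retrying transient errors with exponential backoff.

    Waits backoff, 2*backoff, 4*backoff, ... seconds between attempts and
    re-raises the error if it is not retryable or the retries are exhausted.
    """
    for attempt in range(retries + 1):
        try:
            return fetch(url)
        except Exception as exc:
            if not is_retryable(exc) or attempt == retries:
                log.error("giving up on %s after %d attempt(s): %s", url, attempt + 1, exc)
                raise
            delay = backoff * 2 ** attempt
            log.warning("attempt %d for %s failed (%s); retrying in %.1fs",
                        attempt + 1, url, exc, delay)
            sleep(delay)


def fetch_html(url, timeout=10):
    """Download a page as text using only the standard library."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

In a script you would call something like `fetch_with_retry(fetch_html, url)`. A permanent error such as a 404 fails immediately instead of being retried, because repeating it would only add load without changing the result.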

Monitor Performance

Track response times, success rates, and data quality to measure your scraping script's performance. Then review and update your script regularly to adapt to changes in website structure or data format.
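A small sketch of how these metrics might be collected per run is shown below. The `ScrapeStats` class and its fields are hypothetical names for illustration. Counting "empty pages" is an assumed proxy for data quality: a successful response that yields no records often means the page layout changed.

```python
from statistics import mean


class ScrapeStats:
    """Accumulate per-request outcomes for one scraping run."""

    def __init__(self):
        self.durations = []   # seconds taken by each request
        self.successes = 0
        self.failures = 0
        self.empty_pages = 0  # successful responses that yielded no records

    def record(self, duration, ok, n_records=0):
        self.durations.append(duration)
        if not ok:
            self.failures += 1
            return
        self.successes += 1
        if n_records == 0:
            # Often a sign that the page layout changed and the parser no longer matches.
            self.empty_pages += 1

    def summary(self):
        total = self.successes + self.failures
        return {
            "requests": total,
            "success_rate": self.successes / total if total else 0.0,
            "avg_seconds": mean(self.durations) if self.durations else 0.0,
            "empty_pages": self.empty_pages,
        }


stats = ScrapeStats()
stats.record(0.42, ok=True, n_records=25)
stats.record(0.38, ok=True, n_records=0)
stats.record(1.90, ok=False)
print(stats.summary())
```

In a real loop, the duration would come from `time.perf_counter()` readings taken before and after each request. A rising `empty_pages` count is a cue to revisit the parser.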

Respect Robots.txt

A website's robots.txt file instructs web crawlers about which pages or sections are allowed or disallowed for crawling. Check the robots.txt file of the target website to see if scraping is allowed or restricted for specific areas and abide by the directives outlined in the file.
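Python's standard library includes a robots.txt parser, `urllib.robotparser`. The sketch below feeds it sample rules directly so it runs offline. For a live site you would call `set_url()` and `read()` instead. The rules, URLs, and the `MenuResearchBot` user-agent name are hypothetical.

```python
from urllib import robotparser

# Sample rules for illustration; for a live site you would instead call
# rp.set_url("https://example.com/robots.txt") followed by rp.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 5
"""

rp = robotparser.RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

AGENT = "MenuResearchBot"  # hypothetical user-agent name
for url in ("https://example.com/restaurants/123/menu",
            "https://example.com/checkout/cart"):
    verdict = "allowed" if rp.can_fetch(AGENT, url) else "disallowed"
    print(f"{url}: {verdict}")

# crawl_delay() returns None when the file sets no Crawl-delay for this agent.
print("crawl delay (s):", rp.crawl_delay(AGENT))
```

Checking `can_fetch()` before every request and using any `Crawl-delay` value as the minimum request interval ties this practice together with rate limiting.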

Use Proxy Servers

Use proxy servers or rotate IP addresses to avoid IP blocking and distribute scraping requests across multiple IP addresses. This helps maintain anonymity and reduces the risk of detection by the target website.

Stay Updated

Keep yourself informed about advancements in web scraping techniques, tools, and best practices. Subscribe to relevant forums, communities, or newsletters to stay updated on the latest developments and adapt your scraping approach accordingly.

Legal Compliance

Ensure that your scraping activities comply with local laws and regulations, including data protection and privacy laws. If your scraping involves personal data, obtain consent from users and handle data responsibly according to applicable laws.

Be Transparent

If you're using scraped data for commercial purposes or incorporating it into a public-facing application, be transparent about your data collection practices. Clearly communicate to users how their data will be used and provide options for opting out if desired.

How Does Scraping Intelligence Help Scrape Food Delivery Data?

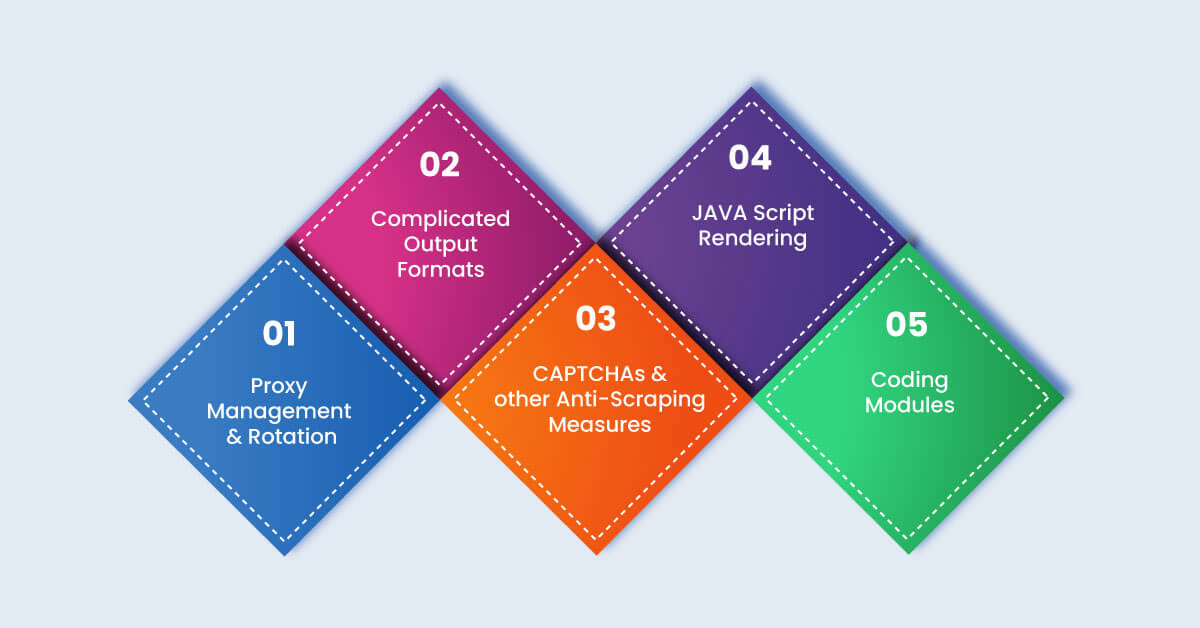

Scraping Intelligence helps food delivery businesses grow and expand in a competitive market. It meets business requirements in several ways:

Proxy Management and Rotation

Proxy management refers to using proxy servers to hide your IP address when scraping data from websites. It involves configuring your scraping tool to rotate through different proxy servers, preventing the target website from blocking your IP address and allowing you to scrape data without interruption.
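Round-robin rotation, one simple form of the rotation described above, can be sketched with `itertools.cycle` and `urllib.request.ProxyHandler`. The proxy addresses are placeholders, and the sketch assumes you have permission to use whichever proxies you substitute. Commercial services typically add health checks and smarter selection, which this does not attempt.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints (hypothetical); substitute proxies you are authorized to use.
PROXIES = [
    "http://proxy1.example.net:8080",
    "http://proxy2.example.net:8080",
    "http://proxy3.example.net:8080",
]


class ProxyRotator:
    """Hand out proxies in round-robin order, with a urllib opener for each."""

    def __init__(self, proxies):
        self._cycle = itertools.cycle(proxies)

    def next_opener(self):
        """Return (proxy_url, opener) where the opener routes traffic via that proxy."""
        proxy = next(self._cycle)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)


rotator = ProxyRotator(PROXIES)
for _ in range(4):
    proxy, opener = rotator.next_opener()
    # opener.open(url, timeout=10) would send the request through this proxy.
    print("next request goes through", proxy)
```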

Complicated Output Formats

Sometimes the data retrieved from websites is not in a simple, easy-to-read form, which makes it difficult to evaluate or use efficiently. Dealing with complex output formats means converting the data into a more organized and understandable format, such as CSV, JSON, or Excel, to make it easier to analyze and modify.
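Once records are parsed into dictionaries, the standard `csv` and `json` modules can turn them into the structured formats mentioned above. The sample records are made up. CSV output opens directly in spreadsheet tools such as Excel, while writing native `.xlsx` files would need a third-party library and is left out here.

```python
import csv
import io
import json

# Made-up sample records, e.g. the output of a menu parser.
records = [
    {"restaurant": "Luigi's", "item": "Margherita Pizza", "price": 11.5, "rating": 4.6},
    {"restaurant": "Green Bowl", "item": "Caesar Salad", "price": 8.25, "rating": 4.2},
]


def to_csv(rows, fieldnames=None):
    """Serialize a list of dicts to CSV text with a header row."""
    fieldnames = fieldnames or (list(rows[0]) if rows else [])
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def to_json(rows):
    """Serialize a list of dicts to pretty-printed JSON text."""
    return json.dumps(rows, indent=2, ensure_ascii=False)


print(to_csv(records))
print(to_json(records))
```

Writing to a string buffer keeps the functions easy to test. For files, open them with `newline=""` when passing them to `csv.DictWriter`.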

CAPTCHAs and Other Anti-Scraping Measures

Websites often employ CAPTCHAs (Completely Automated Public Turing test to tell Computers and Humans Apart) and other measures to prevent automated scraping. These measures can include requiring users to solve puzzles or enter text verification codes before accessing certain pages. Dealing with CAPTCHAs and anti-scraping measures may involve implementing CAPTCHA-solving services or using techniques to bypass or circumvent these measures.

JavaScript Rendering

Many modern websites use JavaScript to dynamically load content and interact with users. It's crucial to make sure the scraping tool can render and run JavaScript code in order to get all the data while scraping these kinds of websites. Using custom scraping software or libraries like Selenium or Puppeteer that provide JavaScript rendering may be necessary for this.

Coding Modules

Coding modules are the libraries, frameworks, or packages used to build scraping scripts or applications. These modules provide pre-written code and functions for tasks such as parsing HTML, processing cookies, and establishing and maintaining sessions. Using them makes it simpler to build scraping tools and resolve typical scraping issues.
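As a small example of what such modules provide, Python's standard `http.cookiejar` and `urllib.request` can be combined into a cookie-aware "session". The opener stores cookies from each response and sends them back on later requests. The `make_session` helper and the user-agent string are hypothetical names for this sketch.

```python
import http.cookiejar
import urllib.request


def make_session(user_agent):
    """Build a urllib opener that keeps cookies across requests, like a browser session."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    # Identify the scraper with a descriptive User-Agent (placeholder value).
    opener.addheaders = [("User-Agent", user_agent)]
    return opener, jar


opener, jar = make_session("MenuResearchBot/0.1 (contact@example.com)")
# html = opener.open("https://example.com/restaurants", timeout=10).read()
# Cookies set by earlier responses are then sent automatically on later requests.
print(len(jar), "cookies stored")
```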

Conclusion

For most businesses, buying a scraping service such as Scraping Intelligence is the more practical option. With Scraping Intelligence, companies don't need to hire a qualified development team or acquire advanced programming skills. Setup is straightforward and can be completed within a few minutes. The documentation also provides detailed step-by-step instructions, making onboarding fast and helping users take full advantage of the service's capabilities.

Incredible Solutions After Consultation

- Industry-Specific Expert Opinion

- Assistance in Data-Driven Decision Making

- Insights Through Data Analysis