Online product data is highly valuable to businesses. It supports market research, competitor comparison, and pricing decisions. However, collecting this data manually from many websites takes considerable time and effort. That's where web scraping software comes in.

Web scraping software is an automated tool that collects specific information, such as product details, from web pages, review sites, social media, and other sources, and organises it into structured formats. Whether you are a small business or a large enterprise, the right web scraping tool can make data collection much faster and help you spot important trends. Here's a list of the top web scraping tools you can use in 2024, along with their key features and benefits.

Scraping Intelligence

Scraping Intelligence specializes in collecting data from websites. It aims to deliver high-quality, easy-to-use data drawn from publicly available sources, and its tools are designed to help businesses of all kinds reach their goals by gathering web data on their behalf.

Features

- Competitive Insights

- Data Targeting and Filtering

- User-Friendly Interface

- Industry-Specific Scraping

- Advanced Features

Advantages

- Structured Data

- Efficiency Boost

- Free Trial

- Customer Support

- Data Export Options

Octoparse

Octoparse is a user-friendly, cloud-based tool that helps individuals and businesses collect product data and improve their operations. Its no-code interface is designed for beginners and users without programming expertise.

Features

- Point-and-click interface

- Pre-built templates for popular websites (Amazon, eBay, etc.).

- Cloud-based scraping

- Data extraction from various formats (HTML, JSON).

- Export data in various formats (CSV, Excel, JSON).

Advantages

- Perfect for beginners with its intuitive interface.

- Pre-built templates save time and effort.

- Cloud-based scraping eliminates local hardware limitations.

- Supports a wide range of data formats.

Scrapy

Scrapy is a powerful, versatile, open-source web scraping framework written in Python. Unlike point-and-click tools, Scrapy is customized through Python code, which makes it ideal for experienced programmers who want fine-grained control over how they collect data.

Features

- Robust and adaptable for complex data collection tasks.

- Extensive customization options through Python code.

- Large, active community that offers help and learning resources.

- Support for handling cookies, sessions, and sign-in requirements.

Advantages

- Highly customizable for experienced programmers.

- Free and open source, so there are no licensing costs.

- A user community that shares useful advice and help.

- Well suited to tricky scraping scenarios, such as sites that require sign-in.

ParseHub

ParseHub lets anyone, regardless of technical skill, extract important information from websites. It can be especially valuable for freelancers and consultants who collect data on behalf of clients.

Features

- Effortless Point-and-Click Interface

- Built-in Functionality for Common Challenges

- Cloud-Based Scraping

- Multiple Data Export Formats

- Paid plans offer API access

Advantages

- Beginner-Friendly

- Free Plan for Basic Needs

- Community Support

- Cloud-Based Convenience

- Handles Common Scraping Challenges

Oxylabs

Oxylabs offers a suite of Scraper APIs built on its proxy infrastructure to tackle common web scraping challenges. It caters to businesses and developers who need a robust, scalable scraping solution.

Features

- Focus on Speed and Efficiency

- Multiple API Options

- Headless Browser Support

- Customizable Parameters

- Patented Proxy Rotator

- Real-time Data Delivery

Advantages

- Bypass Website Blocks

- Handle Complex Websites

- Superior Geo-Targeting Capabilities

- Efficient Proxy Management

- User-Friendly Interfaces That Require Minimal Coding Knowledge

Webscraper.io

Webscraper.io offers two ways to collect data from websites, depending on your experience level. Beginners can use a simple Chrome extension to point, click, and choose the data they want. Advanced users can turn to Web Scraper Cloud, which provides more powerful tools for demanding data collection needs.

Features

- Export data in various formats (CSV, Excel)

- JavaScript Execution

- Dynamic Content Handling

- Pagination Handling

- Scheduling and Automation

- Integrate with Other Tools

Advantages

- Two Solutions for Different Needs

- Focus on Modern Websites

- Data Export Flexibility

- Scalability
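Pagination handling, listed among Webscraper.io's features, boils down to following "next page" links until a listing runs out. The following is a minimal Python sketch of that loop, not how Webscraper.io implements it. The `fetch_page` callback, the `/products?page=N` URLs, and the in-memory `FAKE_SITE` are hypothetical stand-ins for real HTTP requests and HTML parsing, so the example runs offline.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A fetched page yields (items found on it, URL of the next page or None).
Page = Tuple[List[str], Optional[str]]


def crawl_paginated(start_url: str,
                    fetch_page: Callable[[str], Page],
                    max_pages: int = 50) -> List[str]:
    """Follow 'next page' links from start_url and collect every item."""
    items: List[str] = []
    seen = set()
    url: Optional[str] = start_url
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)  # remember visited pages to avoid pagination loops
        page_items, url = fetch_page(url)
        items.extend(page_items)
    return items


# Hypothetical in-memory "site" standing in for real HTTP requests.
FAKE_SITE: Dict[str, Page] = {
    "/products?page=1": (["Lamp", "Chair"], "/products?page=2"),
    "/products?page=2": (["Desk"], "/products?page=3"),
    "/products?page=3": (["Shelf"], None),
}

print(crawl_paginated("/products?page=1", lambda url: FAKE_SITE[url]))
# → ['Lamp', 'Chair', 'Desk', 'Shelf']
```

The `seen` set and the `max_pages` cap are defensive choices: some sites link the last page back to an earlier one, and a hard limit keeps a misconfigured job from crawling indefinitely.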

Apify

Apify is a flexible data collection platform that works well for both beginners and advanced users. It runs in the cloud, offers a range of data collection tools, and has a large user community, which makes it a strong choice for gathering product information from the web.

Features

- Visual Web Scraper Builder for beginners

- Puppeteer Scraper for experienced users

- Cloud-Based Infrastructure

- Integration with Cloud Services

- Large Community and Marketplace

- Project Management

Advantages

- Advanced Capabilities

- Suitable for All Skill Levels

- Scalable Cloud Infrastructure

- Project Flexibility

- Streamlined Workflows

- Active Community and Resources

Bright Data

Bright Data does more than collect data from websites. It also provides proxy and unblocking infrastructure that helps keep data collection reliable. This powerful suite is useful for anyone who needs dependable access to information on the web.

Features

- Proxy Infrastructure

- Unlocker to tackle anti-bot measures

- Scraping Browser

- Web Scraper IDE

- Automated Session Management

- Scalability for Large Projects

Advantages

- Advanced unblocking capabilities

- Pre-built Datasets

- Flexibility

- Scalability and Reliability

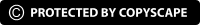

How Web Scraping Software Helps with Product Data Extraction

Web scraping software is a helpful tool for businesses and individuals who want to collect web data quickly. Here's how it helps:

Automated Data Collection

Gathering product information by manually visiting many websites is time-consuming. Web scraping software does this job automatically, saving a great deal of time and effort.

Comprehensive Data Extraction

Beyond basic product details, scraping tools can gather customer reviews, star ratings, price history, and more. This gives you a complete dataset to examine and learn from.
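Once reviews, ratings, and price history are collected, turning them into a few comparable figures is straightforward. This minimal sketch uses Python's standard `statistics` module on hypothetical records; the `stars` field and the `(date, price)` tuples are assumptions about how a scraper might store the data, not a format any particular tool produces.

```python
from statistics import mean

# Hypothetical data a scraper might collect for one product.
reviews = [{"stars": 5}, {"stars": 4}, {"stars": 3}, {"stars": 4}]
# Assumed to be sorted from oldest to newest.
price_history = [("2024-01-01", 59.99), ("2024-02-01", 54.99), ("2024-03-01", 49.99)]


def summarize(reviews, price_history):
    """Reduce raw reviews and dated prices to a few comparable figures."""
    first_price = price_history[0][1]
    last_price = price_history[-1][1]
    return {
        "review_count": len(reviews),
        "avg_stars": round(mean(r["stars"] for r in reviews), 2),
        # Percentage change from the oldest to the newest recorded price.
        "price_change_pct": round((last_price - first_price) / first_price * 100, 1),
    }


print(summarize(reviews, price_history))
```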

Competitive Analysis

Businesses can learn a lot about what's popular and what customers like by collecting information on what their competitors are selling, how much they charge, and how well their products are doing.

Price Monitoring

Online shops can change prices based on real-time information they collect about their competitors' prices. They can also stay one step ahead by monitoring deals and discounts their competitors offer.
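A basic price-monitoring check compares your own prices with the competitor prices a scraper has gathered and flags where you are being undercut. The SKUs, prices, and the `find_undercuts` helper below are hypothetical; a production system might also need to account for shipping, currency, and stock status.

```python
# Hypothetical data: our prices and the lowest competitor price found per SKU.
our_prices = {"SKU-1": 24.99, "SKU-2": 149.00, "SKU-3": 9.50}
competitor_prices = {"SKU-1": 22.49, "SKU-2": 155.00}


def find_undercuts(ours, theirs, tolerance=0.0):
    """Map each SKU a competitor sells cheaper (by more than `tolerance`)
    to the price gap. SKUs without competitor data are skipped."""
    alerts = {}
    for sku, our_price in ours.items():
        their_price = theirs.get(sku)
        if their_price is not None and our_price - their_price > tolerance:
            alerts[sku] = round(our_price - their_price, 2)
    return alerts


print(find_undercuts(our_prices, competitor_prices))
# → {'SKU-1': 2.5}
```

The `tolerance` parameter lets you ignore trivial gaps, for example by only flagging competitors that are more than a dollar cheaper.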

Improving SEO and Market Presence

By examining how products are discussed and reviewed, businesses can devise better ways to appear in search results. Knowing what people like or don't like about products can help them create better ads or posts by answering common questions or highlighting what's great about their products.

Customizable and Scalable

Web scraping software can be configured to extract only the information a business is interested in. It can scale data collection up or down as needed, so it works for businesses of any size and projects of any scope.
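To illustrate what extracting only the information you need looks like under the hood, here is a minimal sketch using Python's standard-library `html.parser`. The markup and the class names `product`, `name`, and `price` are hypothetical; real pages have their own structure, and point-and-click tools generate equivalent extraction rules for you.

```python
from html.parser import HTMLParser

# Hypothetical markup; real sites use their own tags and class names.
SAMPLE_HTML = """
<div class="product"><span class="name">Desk Lamp</span><span class="price">$24.99</span></div>
<div class="product"><span class="name">Office Chair</span><span class="price">$149.00</span></div>
"""


class ProductParser(HTMLParser):
    """Collects name/price pairs from elements with the assumed class names."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field that the next text node belongs to

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.products.append({})  # start a new structured record
        elif "name" in classes or "price" in classes:
            self._field = "name" if "name" in classes else "price"

    def handle_data(self, data):
        if self._field and self.products and data.strip():
            self.products[-1][self._field] = data.strip()
            self._field = None


def extract_products(html):
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products


for row in extract_products(SAMPLE_HTML):
    print(row)
# → {'name': 'Desk Lamp', 'price': '$24.99'}
# → {'name': 'Office Chair', 'price': '$149.00'}
```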

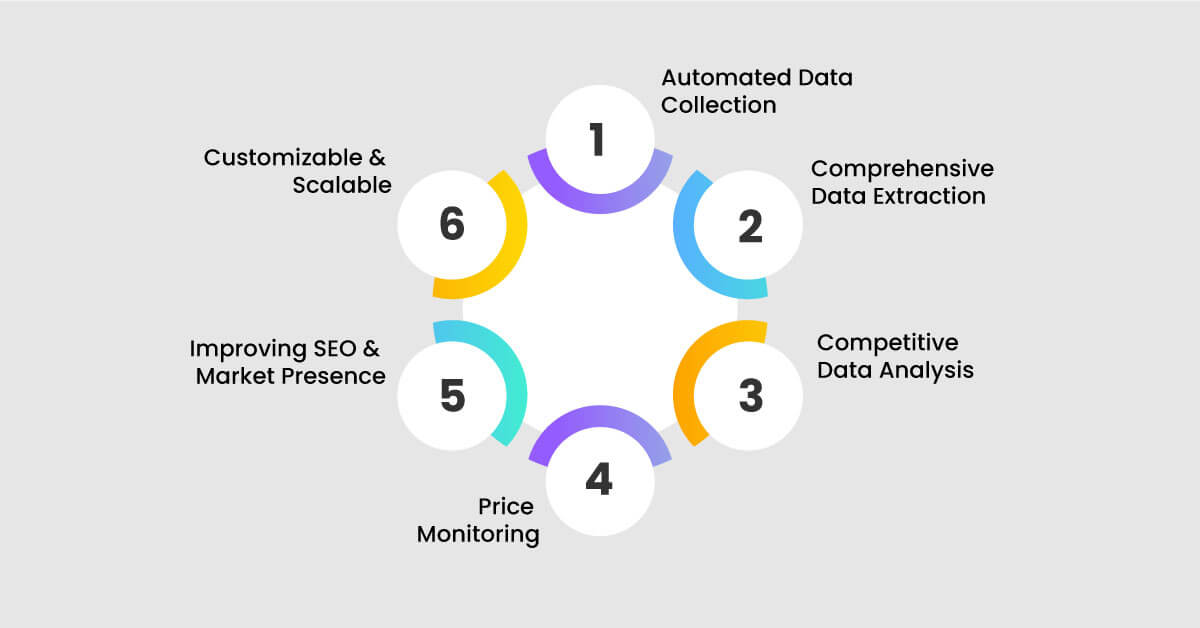

Considerations While Choosing a Web Scraping Tool

When selecting a web scraping tool, consider the following factors to make sure you choose the best one for your needs:

Technical Expertise

If you're new to web scraping, prioritize user-friendly interfaces with point-and-click functionality (e.g., Octoparse, ParseHub). For experienced users, tools with scripting capabilities like Python libraries (Beautiful Soup) or frameworks (Scrapy) offer greater control and customization.

Website Complexity

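For simpler websites with static content, most scraping tools will suffice. Websites that rely heavily on JavaScript or other dynamic content require tools that can render or otherwise handle that content (e.g., Apify). Scrapy does not execute JavaScript on its own, so it typically needs a browser-rendering add-on for such sites.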
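One technique that sometimes avoids rendering JavaScript: many product pages embed structured data as schema.org JSON-LD inside a `<script type="application/ld+json">` tag, which a plain HTML parser can read. The minimal sketch below uses only the Python standard library and a hypothetical sample page. Not every site includes JSON-LD, and pages that build their content entirely in the browser still require a tool that executes JavaScript.

```python
import json
from html.parser import HTMLParser


class JsonLdParser(HTMLParser):
    """Collects the raw contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data  # script text may arrive in several chunks


def extract_jsonld(html):
    parser = JsonLdParser()
    parser.feed(html)
    parser.close()
    return [json.loads(block) for block in parser.blocks if block.strip()]


# Hypothetical page: the visible body is empty (filled in later by JavaScript),
# but the product data is already present as JSON-LD.
SAMPLE = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Desk Lamp",
 "offers": {"@type": "Offer", "price": "24.99", "priceCurrency": "USD"}}
</script></head><body><div id="app"></div></body></html>"""

for item in extract_jsonld(SAMPLE):
    if item.get("@type") == "Product":
        print(item["name"], item["offers"]["price"])
```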

Data Needs

Identify the exact data points you need (product names, prices, reviews). Choose a tool that allows you to target and extract specific data. If you need to extract vast amounts of data, consider cloud-based tools (Apify, Octoparse) that offer scalability for handling high volumes.

Data Output Format

Ensure the tool exports data in formats you can work with, such as CSV, Excel, or JSON. Most scraping tools offer several export options. If you plan to integrate scraped data with other tools (such as BI platforms), look for software with compatible output formats or built-in connectors (e.g., Scraping Intelligence).
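For reference, exporting the same records to CSV and JSON takes only the Python standard library. The records below are hypothetical; when writing CSV to a file rather than a string, open the file with `newline=""`, as the `csv` module documentation recommends.

```python
import csv
import io
import json

# Hypothetical scraped records.
records = [
    {"name": "Desk Lamp", "price": 24.99, "rating": 4.5},
    {"name": "Office Chair", "price": 149.0, "rating": 4.1},
]


def to_csv(rows):
    """Serialize a list of dicts to CSV text; the first row's keys become headers."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize the same records as a pretty-printed JSON array."""
    return json.dumps(rows, indent=2)


print(to_csv(records))
print(to_json(records))
```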

Ethical Considerations

Always adhere to website robots.txt guidelines and terms of service. Responsible scraping avoids overloading servers or violating website policies. Focus on publicly available data and prioritize tools that emphasize ethical scraping practices.
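Python's standard library includes `urllib.robotparser` for checking robots.txt rules. The sketch below parses a hypothetical robots.txt, skips disallowed URLs, and pauses between requests according to `Crawl-delay`. The bot name, the URLs, and the one-second fallback delay are assumptions. Keep in mind that robots.txt is only one signal; it does not replace a site's terms of service.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; for a real site you would call
# robots.set_url("https://example.com/robots.txt") and robots.read() instead.
ROBOTS_TXT = """
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
""".splitlines()

USER_AGENT = "example-product-bot"  # hypothetical bot name

robots = RobotFileParser()
robots.parse(ROBOTS_TXT)


def polite_urls(urls, sleep=time.sleep):
    """Yield only URLs that robots.txt allows, pausing between them."""
    # Fall back to a 1-second pause if no Crawl-delay is given (an assumption).
    delay = robots.crawl_delay(USER_AGENT) or 1
    first = True
    for url in urls:
        if not robots.can_fetch(USER_AGENT, url):
            continue  # skip paths the site asks bots not to visit
        if not first:
            sleep(delay)  # space out requests so the server is not overloaded
        first = False
        yield url


for url in polite_urls([
    "https://shop.example.com/products/1",
    "https://shop.example.com/checkout/cart",
    "https://shop.example.com/products/2",
]):
    print("would fetch", url)
```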

Pricing and Scalability

Many scraping tools offer free plans or trial periods to test their functionalities before committing. Subscription-based pricing often scales based on data volume or features. Choose a plan that aligns with your scraping needs and budget.

Customer Support

Look for tools with comprehensive documentation, tutorials, and active communities for troubleshooting and learning. Reliable customer support is crucial, especially for complex scraping tasks or encountering technical issues.

Reasons to Choose Web Scraping Software

It's Fast and Saves Time

Web scraping tools can quickly collect large volumes of data from web pages, review sites, social media platforms, and other sources, far faster than a person could.

Accuracy

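When people collect information manually, they can make mistakes, especially on repetitive tasks. A web scraper applies the same extraction rules every time, which avoids these errors and makes the collected data more consistent and reliable, though its output is still worth checking when a website changes its layout.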

Data Handling

The software can organise the information it collects into spreadsheets, so you can start working with it right away. Some tools can even clean up the data during collection so it arrives in exactly the format you need.
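Cleaning often starts with converting scraped text such as `$1,299.00` into numbers. This is a minimal sketch assuming US-style formatting (comma for thousands, period for decimals); the regular expression and the `parse_price` name are illustrative, not taken from any particular tool.

```python
import re
from decimal import Decimal
from typing import Optional

# Digits with optional thousands commas, then an optional decimal part.
PRICE_PATTERN = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(raw: str) -> Optional[Decimal]:
    """Convert scraped text like '$1,299.00' to Decimal('1299.00').

    Assumes US-style formatting (',' for thousands, '.' for decimals);
    returns None when the text contains no number, e.g. 'Out of stock'.
    """
    match = PRICE_PATTERN.search(raw)
    if match is None:
        return None
    return Decimal(match.group().replace(",", ""))


for raw in ["$1,299.00", " USD 24.99 ", "Out of stock"]:
    print(repr(raw), "->", parse_price(raw))
```

It returns `Decimal` rather than `float` so currency values are not subject to binary floating-point rounding. Sites that format prices as `1.299,00` would need a different rule.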

Integration

Once you have your data, you can easily move it into other programs you use. This could be for further analysis, making graphs, or feeding into other business tools that help you make decisions automatically.

Conclusion

Choosing the right web scraping software depends on your technical expertise and project needs. For beginners, user-friendly options like Scraping Intelligence are a great fit. If you're comfortable with coding, Scrapy offers extensive control and customization. For large-scale operations, Apify or Import.io provide robust features. Whichever tool you choose, always respect each website's robots.txt guidelines and terms of service, and prioritize ethical data collection. With the right tool, web scraping can be a powerful asset for market research, price tracking, and more.
