Imagine a dedicated researcher tirelessly searching the ocean of information on the internet for exactly what you need. That's what a web crawler does! Web crawlers, also known as web spiders, move through web pages and collect information ahead of time so that it can be retrieved when a search query is made. Though they operate behind the scenes, the data they gather can inform business strategy. But how do they work, and why are they so crucial for businesses? In this blog, we will look at how they work their magic and how they can be a game-changer for businesses of all sizes and types. Worry not; you don't have to be a tech whiz to understand this. We will break it down into understandable pieces.

What is a Web Crawler?

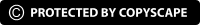

Web crawling is the automated process of systematically browsing the internet: visiting websites, downloading content, and following links to discover more websites, using software called a "web crawler" or a "bot". The primary purpose of web crawling is to index the content of websites so it can be retrieved and displayed when someone searches for it.

A web crawler, or spiderbot, is an internet bot that systematically navigates the internet for web indexing. That means it scans websites and downloads the pages it visits, which the search engine later processes and indexes so that searches are quick and accurate.

In simple terms, a web crawler is like a librarian cataloging the books available in the library. By cataloging the entire library, the librarian ensures that visitors find exactly what they're looking for when visiting the library.

Unlike a library, the internet does not have a physical pile of books, which makes it harder to index, and a lot of data can be overlooked for various reasons. There are billions of web pages on the internet, so a web crawler starts with pages it already knows, follows the hyperlinks on those pages to new pages, and repeats the process from there.

How Does a Web Crawler Work?

The internet is continuously evolving, and it is impossible to keep track of the total number of web pages. A web crawler therefore starts with a list of seed URLs and visits each one. On each page, it reads the content and extracts the links it contains, then follows those links to discover additional pages. Repeating this process lets the crawler cover a large share of the web. Each time it visits a page, the crawler collects data about the page and stores it in an index or database. This turns the crawler's map of linked pages into a searchable library, allowing search engines to quickly find and rank relevant websites for user queries.
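The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular search engine is built: the `FAKE_WEB` dictionary stands in for the real internet so the example runs without network access, and the names `LinkExtractor`, `crawl`, and `max_pages` are made up for this sketch.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "web" (URL -> HTML). A real crawler would fetch over HTTP.
FAKE_WEB = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post</a> <a href="/about">About</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl. Returns an index mapping each visited URL to its content."""
    frontier = deque(seed_urls)  # pages waiting to be visited
    seen = set(seed_urls)        # pages already queued, to avoid loops
    index = {}
    while frontier and len(index) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:  # page missing or unreachable
            continue
        index[url] = html
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return index


index = crawl(["https://example.com/"], FAKE_WEB.get)
print(list(index))
```

Starting from a single seed URL, the crawler reaches all four pages by following links. The `seen` set is what keeps it from looping forever between pages that link to each other.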

Web crawlers follow specific policies and standards as they crawl. These determine which pages to crawl, in what order, and how often to revisit them to check for updated content.
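One widely used standard of this kind is the `robots.txt` file, which tells crawlers which parts of a site they may visit. As a rough sketch, Python's standard-library `urllib.robotparser` can check a URL against such rules. The rules, the `ExampleBot` user agent, and the `allowed` helper below are invented for illustration, and the rules are parsed from a string rather than downloaded.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())


def allowed(url, user_agent="ExampleBot"):
    """Return True if the parsed rules permit this user agent to fetch the URL."""
    return rules.can_fetch(user_agent, url)


print(allowed("https://example.com/blog"))            # public page
print(allowed("https://example.com/private/report"))  # disallowed path
print(rules.crawl_delay("ExampleBot"))                # seconds to wait between requests
```

A polite crawler would run a check like this before each request and wait for the crawl delay between visits to the same site.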

Why Is Content Relevance Important?

To support accurate search results, a web crawler prioritizes pages that are likely to contain content valuable to users and to the search engine's index. This keeps it from getting bogged down in irrelevant or low-quality websites, improving efficiency and speed.

Websites with consistently relevant content are rewarded by search engines with higher rankings in search results. This motivates website owners to create high-quality content, further improving the overall quality of the indexed information.

Why Do Web Crawlers Revisit Web Pages?

Websites constantly change: new content gets added, old material is removed, and links are updated. In response, web crawlers are continually at work to keep up with these changes and maintain an accurate and up-to-date index of the web. They track changes in product catalogs, reviews, and location details, all of which can shift rapidly. By revisiting pages, crawlers ensure that the search results reflect the most current information.

Regular crawler visits also mean that a site's latest content reaches the index sooner, which gives fresh pages a better chance of showing up in search results. So, web crawlers are always on duty, returning to pages to detect changes and update their databases. This proactive approach helps prevent users from encountering broken links or outdated information.
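One simple way to detect that a page has changed between visits is to store a fingerprint (hash) of its content and compare it on the next visit. The sketch below assumes this approach. The `fingerprint` and `recrawl` helpers are hypothetical names, and real crawlers may use more sophisticated signals.

```python
import hashlib


def fingerprint(content: str) -> str:
    """Return a SHA-256 hash that changes whenever the page content changes."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def recrawl(url, new_content, fingerprints):
    """Record the latest fingerprint for url; return True if the page is new or changed."""
    new_hash = fingerprint(new_content)
    changed = fingerprints.get(url) != new_hash
    fingerprints[url] = new_hash
    return changed


fingerprints = {}  # url -> fingerprint from the previous visit
url = "https://example.com/catalog"
print(recrawl(url, "<p>Widget: $10</p>", fingerprints))  # first visit
print(recrawl(url, "<p>Widget: $10</p>", fingerprints))  # nothing changed
print(recrawl(url, "<p>Widget: $12</p>", fingerprints))  # price updated
```

Only when `recrawl` reports a change does the index entry need to be rebuilt, which saves work on pages that rarely change.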

Examples of Web Crawlers

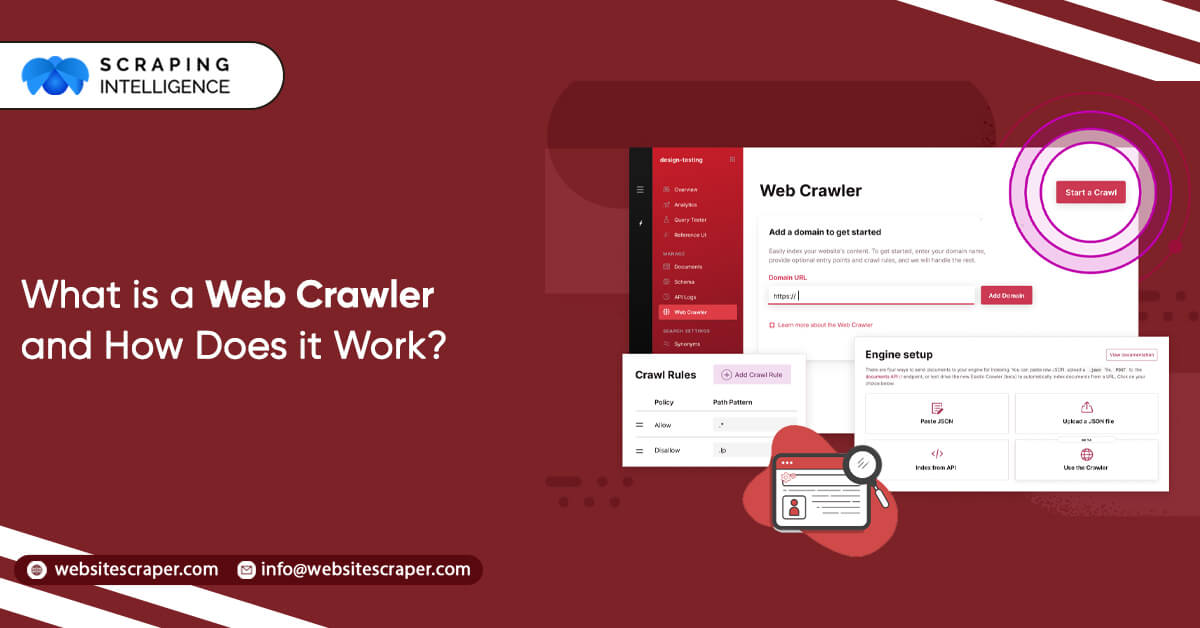

Most major search engines run their own web crawlers, and other companies operate crawlers for their own purposes. Here are some noteworthy examples.

- Googlebot - The most well-known crawler, responsible for indexing the vast majority of the web for Google Search. It's the tireless worker behind Google's search results.

- Bingbot - This is Microsoft's web crawler used for the Bing search engine. It works similarly to Googlebot, crawling the web to build the Bing search index.

- YandexBot - Yandex, a popular search engine in Russia, operates this web crawler. Like Googlebot and Bingbot, it's designed to discover and index new and updated pages.

- DuckDuckBot - DuckDuckBot is the web crawler for DuckDuckGo, a privacy-focused search engine. It helps the search engine provide users with relevant search results.

- Amazonbot - Amazonbot is Amazon's web crawler. Amazon describes it as a way to improve its services, such as helping Alexa answer customers' questions.

- Yahoo Slurp - Yahoo Slurp is the web crawler utilized by Yahoo's search engine. It plays a crucial role in scanning and indexing web page information to enhance Yahoo Search results.

Difference between Web Crawling and Web Scraping

While the terms web crawling and web scraping are often used interchangeably, the two have distinct roles and objectives in the online world. Here's a breakdown of their key differences:

| Aspect | Web Crawling | Web Scraping |
|---|---|---|
| Purpose | Focuses on discovering and indexing web pages. It's like building a comprehensive map of the internet, where every page is a location and links are the connecting roads. Crawlers navigate this map, collecting basic information about each page to create a searchable index. | Aims to extract specific data from web pages. It's like cherry-picking valuable information such as product prices, customer reviews, or social media posts from the vast landscape of the internet. |
| Method | Follows hyperlinks on websites to discover new pages, similar to how a spider spins its web. It analyzes links, prioritizes them based on various factors, and then decides which pages to visit next. | Often bypasses links and directly accesses specific elements within a web page, like tables, forms, or specific text sections. It may use pre-defined patterns or tools to identify and extract the desired data efficiently. |
| Output | Creates a structured index of web pages, often used by search engines to provide relevant results for user queries. The information crawled may include page titles, keywords, and basic content metadata. | Extracts specific data points in various formats like text, numbers, or images. This data can be used for various purposes like price comparison, market research, sentiment analysis, or building datasets. |
| Ethical Considerations | Generally less concerning, as it usually focuses on publicly accessible information and respects robots.txt limitations. | Can raise ethical concerns if it scrapes data without permission or violates robots.txt restrictions. |
| Examples | Googlebot, Bingbot, YandexBot (used by major search engines) | Extracting product prices from online stores, gathering customer reviews from social media platforms, scraping competitor information for market research |
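To make the contrast concrete, here is a small scraping sketch in Python. Where a crawler follows links, this code ignores them and pulls out one specific element. The page markup, the `price` class name, and the `PriceScraper` class are all assumptions made up for this example. A real scraper would be written against the actual structure of the target page.

```python
from html.parser import HTMLParser

# Hypothetical product page; the markup and class names are invented for this example.
PRODUCT_PAGE = """
<html><body>
  <h1 class="title">Blue Widget</h1>
  <span class="price">$19.99</span>
  <a href="/related">Related products</a>
</body></html>
"""


class PriceScraper(HTMLParser):
    """Extracts the text inside <span class="price"> elements and ignores everything else."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        if tag == "span" and ("class", "price") in attrs:
            self._in_price = True

    def handle_data(self, data):
        if self._in_price:
            self.prices.append(data.strip())

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False


scraper = PriceScraper()
scraper.feed(PRODUCT_PAGE)
print(scraper.prices)
```

Note that the link to `/related` is never followed. The scraper only cares about the data point it was written to extract.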

Why Are Web Crawlers Important for SEO?

Web crawlers, which you can create using Python, are like the helpers of the internet. They surf the web, finding new website content and refreshing what is already indexed. The data they collect on content quality, keyword usage, and link quality feeds the search engine's decision about how high a page should appear for a search query. This helps search engines show searchers the most relevant and up-to-date pages. Crawlers also surface problems, like broken links or slow pages, that could keep a page from appearing in search results. If web crawlers can't reach your website, it won't appear when people search. So, being crawlable is a must-have for good SEO.

How Can Businesses Use Web Crawlers?

While crawling through the web, these web crawlers come across a lot of information that helps businesses grow. Here are some advantages of web crawlers.

Market Trends

With a web crawler written in Python, businesses can automatically look through competitors' websites and gather important information. This data can include product prices, customer feedback, and marketing strategies. Python makes this easier because it has many helpful libraries and can handle even complicated websites well.

SEO Optimization

Web crawlers can help companies evaluate their websites for search engine optimization (SEO) performance. This includes identifying broken links, analyzing site structure, or finding duplicate content.
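As an illustration of one such check, the sketch below looks for broken internal links. It is a simplified example under stated assumptions: the `SITE` dictionary stands in for pages a crawler has already downloaded, every link is assumed to be internal, and `find_broken_links` is a hypothetical helper, not part of any SEO tool.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical crawled site (URL -> HTML). "/old-page" was deleted but is still linked.
SITE = {
    "https://example.com/": '<a href="/pricing">Pricing</a> <a href="/old-page">Old</a>',
    "https://example.com/pricing": '<a href="/">Home</a>',
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.links.extend(value for name, value in attrs if name == "href" and value)


def find_broken_links(site):
    """Return (source page, target URL) pairs whose target is not a known page."""
    broken = []
    for page_url, html in site.items():
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            target = urljoin(page_url, href)
            if target not in site:  # assumes every link points inside the site
                broken.append((page_url, target))
    return broken


print(find_broken_links(SITE))
```

The report tells you both where the broken link lives and where it points, which is what you need to fix it.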

Social Media Monitoring

Web crawlers can track mentions of your business across social media and the internet, giving you a real-time understanding of your customers' sentiments and opinions.

Data Integration

Web crawlers can collect data from multiple sources and generate valuable insights, aiding business intelligence and decision-making.

Conclusion

Search engines aim to deliver quality, up-to-date information to users. By understanding how web crawlers and tools like web scrapers function, you can use their abilities to improve your website and get a leg up on your competitors. They assist in enhancing your website's visibility in search results, making it easier for online users to find you. As technology continues to evolve, the possibilities with web crawlers and web scrapers are endless. They represent a wealth of potential for businesses and individuals alike, allowing for continued success in the rapidly changing world of the internet. Scraping Intelligence provides the best web scraping services that fit the requirements of businesses of all sizes. Web scraping allows companies to convert unorganized data from the internet into structured information that their platforms can use.